Google offers an AI image classification tool that analyzes images to classify the content and assign labels to them.

The tool is intended as a demonstration of Google Vision, which can scale image classification on an automated basis. It can also be used as a standalone tool to see how an image detection algorithm views your images and what they’re relevant for.

Even if you don’t use the Google Vision API to scale image detection and classification, the tool offers a useful view into what Google’s image-related algorithms are capable of, so it is worth uploading images to see how Google’s Vision algorithm classifies them.

This tool demonstrates Google’s AI and Machine Learning algorithms for understanding images.

It’s a part of Google’s Cloud Vision API suite that offers vision machine learning models for apps and websites.

Does Cloud Vision Tool Reflect Google’s Algorithm?

This is just a machine learning model and not a ranking algorithm.

So, it is unrealistic to use this tool and expect it to reflect something about Google’s image ranking algorithm.

However, it is a great tool for understanding how Google’s AI and Machine Learning algorithms can understand images, and it will offer an educational insight into how advanced today’s vision-related algorithms are.

The information provided by this tool can be used to understand how a machine might understand what an image is about and possibly provide an idea of how accurately that image fits the overall topic of a webpage.

Why Is An Image Classification Tool Useful?

Images can play an important role in search visibility and CTR across the various ways that webpage content is surfaced on Google.

Potential site visitors who are researching a topic use images to navigate to the right content.

Thus, using attractive images that are relevant for search queries can, within certain contexts, be helpful for quickly communicating that a webpage is relevant to what a person is searching for.

The Google Vision tool provides a way to understand how an algorithm may view and classify an image in terms of what is in the image.

Google’s guidelines for image SEO recommend:

“High-quality photos appeal to users more than blurry, unclear images. Also, sharp images are more appealing to users in the result thumbnail and increase the likelihood of getting traffic from users.”

If the Vision tool is having trouble identifying what the image is about, that may be a signal that potential site visitors are having the same trouble and deciding not to visit the site.

What Is The Google Image Tool?

The tool is a way to demo Google’s Cloud Vision API.

The Cloud Vision API is a service that lets apps and websites connect to the machine learning tool, providing image analysis services that can be scaled.

The standalone tool itself allows you to upload an image, and it tells you how Google’s machine learning algorithm interprets it.

Google’s Cloud Vision page describes how the service can be used like this:

“Cloud Vision allows developers to easily integrate vision detection features within applications, including image labeling, face and landmark detection, optical character recognition (OCR), and tagging of explicit content.”

These are five ways Google’s image analysis tools classify uploaded images:

- Faces.

- Objects.

- Labels.

- Properties.

- Safe Search.
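For readers who want to go beyond the demo, the sketch below shows how a request for those five analyses might be assembled for the Cloud Vision REST endpoint (`images:annotate`). The feature type names are the ones the API uses, but the mapping from demo tabs to feature types is an assumption, and `build_annotate_request` is a hypothetical helper written for illustration. It only builds the JSON body and does not send anything.

```python
import base64
import json

# Assumed mapping from the demo's tabs to Cloud Vision feature types.
TAB_TO_FEATURE = {
    "Faces": "FACE_DETECTION",
    "Objects": "OBJECT_LOCALIZATION",
    "Labels": "LABEL_DETECTION",
    "Properties": "IMAGE_PROPERTIES",
    "Safe Search": "SAFE_SEARCH_DETECTION",
}


def build_annotate_request(image_bytes: bytes, tabs=tuple(TAB_TO_FEATURE)) -> dict:
    """Return a JSON-serializable body for a POST to .../v1/images:annotate."""
    return {
        "requests": [
            {
                # The API accepts the image as base64-encoded content.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [{"type": TAB_TO_FEATURE[tab]} for tab in tabs],
            }
        ]
    }


# Placeholder bytes stand in for a real image file read from disk.
body = build_annotate_request(b"placeholder image bytes", tabs=("Labels", "Safe Search"))
print(json.dumps(body, indent=2))
```

Sending the body requires a Google Cloud project and credentials, which are outside the scope of this sketch.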

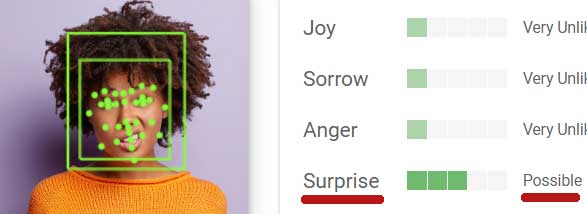

Faces

The “faces” tab provides an analysis of the emotion expressed by the image.

The result is fairly accurate.

The image below shows a person described as confused, but that’s not really an emotion.

The AI describes the emotion expressed in the face as surprised, with a 96% confidence score.

Composite image created by author, July 2022; images sourced from Google Cloud Vision API and Shutterstock/Cast Of Thousands
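When the same analysis is requested through the API, each detected face comes back with likelihood ratings for a handful of emotions rather than a single word. The sketch below picks the strongest one. The field names and likelihood values follow the API's face annotation format as I understand it, `strongest_emotion` is a hypothetical helper, and the sample face is hand-written for illustration, not real output.

```python
# Likelihood values used by the Vision API, ordered weakest to strongest.
LIKELIHOOD_ORDER = [
    "UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY",
]

# Emotion name -> field name in a face annotation.
EMOTION_FIELDS = {
    "joy": "joyLikelihood",
    "sorrow": "sorrowLikelihood",
    "anger": "angerLikelihood",
    "surprise": "surpriseLikelihood",
}


def _rank(value: str) -> int:
    """Position of a likelihood value; unrecognized values rank lowest."""
    return LIKELIHOOD_ORDER.index(value) if value in LIKELIHOOD_ORDER else 0


def strongest_emotion(face: dict) -> tuple[str, str]:
    """Return (emotion, likelihood) for the highest-rated emotion on one face."""
    best = max(EMOTION_FIELDS, key=lambda e: _rank(face.get(EMOTION_FIELDS[e], "UNKNOWN")))
    return best, face.get(EMOTION_FIELDS[best], "UNKNOWN")


# Hand-written sample, not real API output.
sample_face = {
    "joyLikelihood": "VERY_UNLIKELY",
    "sorrowLikelihood": "VERY_UNLIKELY",
    "angerLikelihood": "UNLIKELY",
    "surpriseLikelihood": "VERY_LIKELY",
}
print(strongest_emotion(sample_face))
```

Note that "confused" is not among the emotions in this list, which may explain why the tool settles on the nearest available one.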

Objects

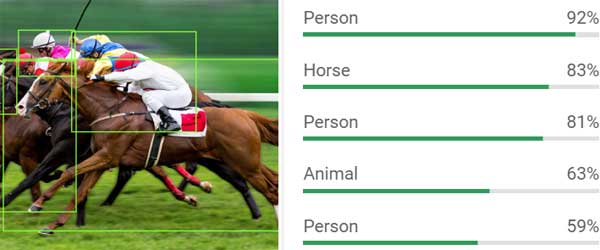

The “objects” tab shows what objects are in the image, like glasses, person, etc.

The tool accurately identifies horses and people.

Composite image created by author, July 2022; images sourced from Google Cloud Vision API and Shutterstock/Lukas Gojda

Labels

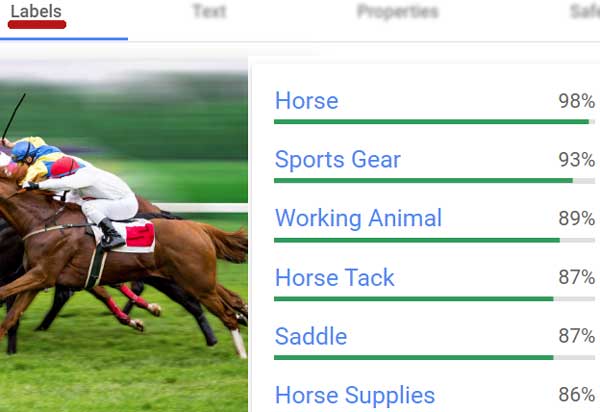

The “labels” tab shows details about the image that Google recognizes, like ears and mouth but also conceptual aspects like portrait and photography.

This is particularly interesting because it shows how deeply Google’s image AI can understand what is in an image.

Composite image created by author, July 2022; images sourced from Google Cloud Vision API and Shutterstock/Lukas Gojda

Does Google use that as part of the ranking algorithm? That’s something that is not known.
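For anyone pulling labels through the API rather than the demo page, each label arrives with a score, so it is straightforward to keep only the confident ones. The sketch below assumes the response shape used by the REST API (`labelAnnotations` with `description` and `score`). `labels_above` is a hypothetical helper, the 0.8 threshold is arbitrary, and the sample response is made up.

```python
def labels_above(response: dict, min_score: float = 0.8) -> list[str]:
    """Return label descriptions at or above min_score, highest score first."""
    labels = response.get("labelAnnotations", [])
    kept = [label for label in labels if label.get("score", 0.0) >= min_score]
    kept.sort(key=lambda label: label.get("score", 0.0), reverse=True)
    return [label["description"] for label in kept]


# Made-up sample response for illustration only.
sample_response = {
    "labelAnnotations": [
        {"description": "Portrait", "score": 0.91},
        {"description": "Ear", "score": 0.97},
        {"description": "Photography", "score": 0.85},
        {"description": "Sky", "score": 0.42},
    ]
}
print(labels_above(sample_response))
```

Comparing the surviving labels with the topic of the page is one rough way to check whether an image fits its surroundings.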

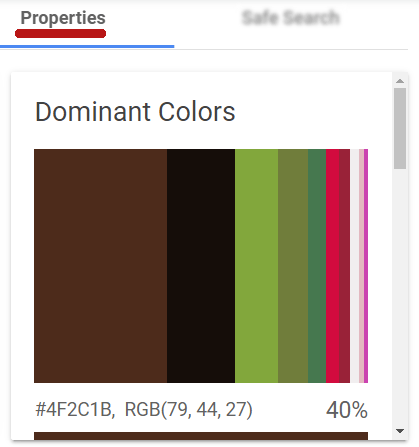

Properties

Properties are the colors used in the image.

Screenshot from Google Cloud Vision API, July 2022

On the surface, the point of this tool isn’t obvious, and it may seem to have little utility.

But in reality, the colors of an image can be very important, particularly for a featured image.

Images that contain a very wide range of colors can be an indication of a poorly chosen image with a bloated file size, which is something to look out for.

Another useful insight about images and color is that images with a darker color range tend to result in larger image files.

In terms of SEO, the Properties section may be useful for identifying images across an entire website that can be swapped out for ones that are less bloated in size.

Also, color ranges for featured images that are muted or even grayscale might be something to look out for because featured images that lack vivid colors tend to not pop out on social media, Google Discover, and Google News.

For example, featured images that are vivid can be easily scanned and possibly receive a higher click-through rate (CTR) when shown in the search results or in Google Discover, since they call out to the eye better than images that are muted and fade into the background.

There are many variables that can affect the CTR performance of images, but this provides a way to scale up the process of auditing the images of an entire website.

eBay conducted a study of product images and CTR and discovered that images with lighter background colors tended to have a higher CTR.

The eBay researchers noted:

“In this paper, we find that the product image features can have an impact on user search behavior.

We find that some image features have correlation with CTR in a product search engine and that that these features can help in modeling click through rate for shopping search applications.

This study can provide sellers with an incentive to submit better images for products that they sell.”

Anecdotally, the use of vivid colors for featured images might be helpful for increasing the CTR for sites that depend on traffic from Google Discover and Google News.

Obviously, there are many factors that impact the CTR from Google Discover and Google News. But an image that stands out from the others may be helpful.

So for that reason, using the Vision tool to understand the colors used can be helpful for a scaled audit of images.
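As a rough illustration of what such an audit could look like, the sketch below estimates how vivid an image is from the dominant colors the API returns. It assumes the REST response shape (`imagePropertiesAnnotation.dominantColors.colors`, each with an RGB `color` and a `pixelFraction`). Using average saturation as a stand-in for "vivid" and the 0.25 cutoff are both my assumptions, not anything Google documents, and the function names are hypothetical.

```python
import colorsys


def average_saturation(response: dict) -> float:
    """Pixel-weighted mean HSV saturation (0.0 to 1.0) of the dominant colors."""
    colors = (
        response.get("imagePropertiesAnnotation", {})
        .get("dominantColors", {})
        .get("colors", [])
    )
    total_weight = sum(c.get("pixelFraction", 0.0) for c in colors)
    if total_weight == 0:
        return 0.0  # No color data; treated as fully muted.
    weighted = 0.0
    for c in colors:
        rgb = c.get("color", {})
        r, g, b = (rgb.get(k, 0) / 255 for k in ("red", "green", "blue"))
        _, saturation, _ = colorsys.rgb_to_hsv(r, g, b)
        weighted += saturation * c.get("pixelFraction", 0.0)
    return weighted / total_weight


def is_muted(response: dict, threshold: float = 0.25) -> bool:
    """Flag images whose average saturation falls below an arbitrary cutoff."""
    return average_saturation(response) < threshold


# Made-up sample: half pure red, half mid-gray.
sample_response = {
    "imagePropertiesAnnotation": {
        "dominantColors": {
            "colors": [
                {"color": {"red": 255, "green": 0, "blue": 0}, "pixelFraction": 0.5},
                {"color": {"red": 128, "green": 128, "blue": 128}, "pixelFraction": 0.5},
            ]
        }
    }
}
print(average_saturation(sample_response), is_muted(sample_response))
```

Run across every featured image on a site, a check like this could produce a shortlist of candidates to review by eye.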

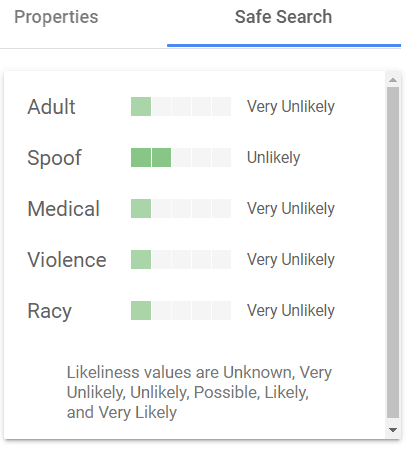

Safe Search

Safe Search shows how the image rates for unsafe content. The categories of potentially unsafe content are as follows:

- Adult.

- Spoof.

- Medical.

- Violence.

- Racy.

Google search has filters that evaluate a webpage for unsafe or inappropriate content.

For that reason, the Safe Search section of the tool is very important: if an image unintentionally triggers a safe search filter, the webpage may fail to rank for potential site visitors who are looking for its content.

Screenshot from Google Cloud Vision API, July 2022

The above screenshot shows the evaluation of a photo of racehorses on a race track. The tool accurately identifies that there is no medical or adult content in the image.
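The same check can be scripted. The sketch below lists the Safe Search categories that an image rates at or above a chosen likelihood. It assumes the REST response shape (`safeSearchAnnotation` with one likelihood per category). `flagged_categories` is a hypothetical helper and the sample is made up. Whether these ratings match the filters used in Google Search is not something the API documentation confirms, so treat a flag as a prompt to look at the image, not as a verdict.

```python
# Likelihood values used by the Vision API, ordered weakest to strongest.
LIKELIHOOD_ORDER = [
    "UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY",
]

SAFE_SEARCH_CATEGORIES = ("adult", "spoof", "medical", "violence", "racy")


def flagged_categories(response: dict, at_least: str = "POSSIBLE") -> list[str]:
    """Return the categories rated at_least or higher for one image."""
    annotation = response.get("safeSearchAnnotation", {})
    floor = LIKELIHOOD_ORDER.index(at_least)
    flagged = []
    for category in SAFE_SEARCH_CATEGORIES:
        value = annotation.get(category, "UNKNOWN")
        rank = LIKELIHOOD_ORDER.index(value) if value in LIKELIHOOD_ORDER else 0
        if rank >= floor:
            flagged.append(category)
    return flagged


# Made-up sample for illustration only.
sample_response = {
    "safeSearchAnnotation": {
        "adult": "VERY_UNLIKELY",
        "spoof": "UNLIKELY",
        "medical": "VERY_UNLIKELY",
        "violence": "POSSIBLE",
        "racy": "LIKELY",
    }
}
print(flagged_categories(sample_response))
```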

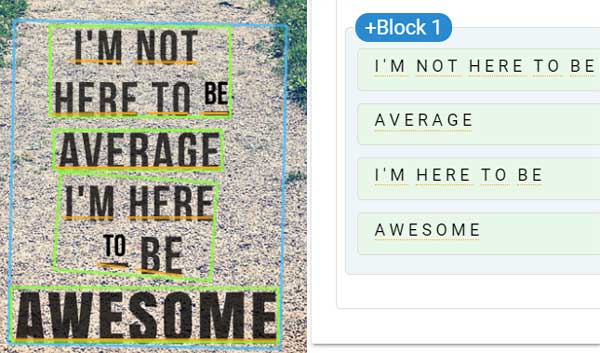

Text: Optical Character Recognition (OCR)

Google Vision has a remarkable ability to read text that is in a photograph.

The Vision tool is able to accurately read the text in the below image:

Composite image created by author, July 2022; images sourced from Google Cloud Vision API and Shutterstock/Melissa King

As can be seen above, Google does have the ability, through Optical Character Recognition (OCR), to read words in images.

However, that’s not an indication that Google uses OCR for search ranking purposes.

The fact is that Google recommends the use of words around images to help it understand what an image is about. It may be the case that, even for images with text within them, Google still depends on the words surrounding the image to understand what the image is about and what it is relevant for.

Google’s guidelines on image SEO repeatedly stress using words to provide context for images.

“By adding more context around images, results can become much more useful, which can lead to higher quality traffic to your site.

…Whenever possible, place images near relevant text.

…Google extracts information about the subject matter of the image from the content of the page…

…Google uses alt text along with computer vision algorithms and the contents of the page to understand the subject matter of the image.”

It’s very clear from Google’s documentation that Google depends on the context of the text around images for understanding what the image is about.
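One practical use of the OCR output, then, is to check whether words that appear only inside an image are also present in the text around it. The sketch below is one way that might be done. It assumes the REST response shape for text detection, where the first entry in `textAnnotations` holds the full detected text. Both helper names and the sample data are hypothetical, and the word matching is deliberately crude.

```python
import re


def ocr_text(response: dict) -> str:
    """Return the full text detected in the image, or an empty string."""
    annotations = response.get("textAnnotations", [])
    return annotations[0].get("description", "") if annotations else ""


def _words(text: str) -> list[str]:
    """Lowercase word tokens; a crude tokenizer that is good enough for a sketch."""
    return re.findall(r"[a-z0-9']+", text.lower())


def words_missing_from_context(response: dict, surrounding_text: str) -> list[str]:
    """List words read from the image that the surrounding text never uses."""
    context = set(_words(surrounding_text))
    missing = []
    for word in _words(ocr_text(response)):
        if word not in context and word not in missing:
            missing.append(word)
    return missing


# Made-up sample: the first annotation is the whole text, later ones are single words.
sample_response = {
    "textAnnotations": [
        {"description": "SALE\nToday only"},
        {"description": "SALE"},
        {"description": "Today"},
        {"description": "only"},
    ]
}
print(words_missing_from_context(sample_response, "Our spring sale starts today."))
```

Any words it returns are candidates for the alt text or a nearby caption.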

Takeaway

Google’s Vision AI tool offers a way to test drive Google’s Vision AI before a publisher connects to it via an API to scale image classification and extract data for use within the site.

But it also provides insight into how far algorithms for image labeling, annotation, and optical character recognition have come.

Upload an image here to see how it is classified, and if a machine sees it the same way that you do.


Featured image by Maksim Shmeljov/Shutterstock