Google’s official algorithm updates often present unique opportunities.

Knowing that Google has made an algorithmic change can present the chance to better understand the search ecosystem. (That’s not to minimize the stress that can come with an algorithm update.)

Simply put, these moments can, at times, help to illuminate “what’s changed” for SEO.

What is Google able to do algorithmically that it couldn’t before? What does it now prefer that in the past it didn’t?

While there are no absolute answers to these questions, in certain moments, it’s possible to peek behind the curtain.

Here’s a glimpse into a few of the things I saw as a consequence of Google’s February 2023 Product Review Update.

A Bird’s Eye View Analysis On The February 2023 Product Review Update

I’ve always found analyzing Google’s updates to be fascinating, especially when diving into not only the domain-level trends but also what’s happening on individual search engine results pages (SERPs).

In the case of the February 2023 Product Review Update (PRU), I almost didn’t write this post. Many of the insights and patterns I saw were relatively similar to some of the analyses I presented around previous core updates and Product Review Updates.

There was, however, an interesting little pattern that I saw that made me change my mind.

Let me just say that what I present here is far from a set of concrete conclusions. The patterns and analysis I present are exactly that – my best analysis using the facts I had in front of me.

At the same time, even if you should agree with my analysis, it is but one sliver of the entire picture. There were many patterns I saw when diving into the February 2023 PRU.

Again, some I found a bit repetitive and didn’t feel the need to write up again. No one can really see the algorithmic picture in its entirety.

Caveats aside, one thing that stuck out to me in the pages that I looked at was Google’s tendency to reward pages based on “how” they spoke to their audience. Specifically, whether they used generic and abstract tones or if they used more personal verbiage.

When looking at some of the specific pages I analyzed, it seemed as though there was a bit of a trend concerning pages that incorporated language indicating firsthand experience being preferred by the search engine.

While that alone is not enough to make a page rank, I did see more than a few examples of pages rewarded by the update using language such as “We particularly loved…” or “I’ve had it (the product) for around five years…”

To me, it seemed as if Google has become a bit more aware of language structure as it pertains to reflecting firsthand experiences of products.

Is this simple conjecture on my part? You be the judge.

Here are just two cases as a sample of what I saw and why I think Google is profiling language to determine the likelihood of actual product usage during the review process.

Deep-Dive Analysis Of Google’s February 2023 Product Review Update

Let’s get into this.

What I did was take some Semrush data I have access to in order to determine ranking trends for a given keyword.

The upshot here is I can see which pages Google rewarded and which pages Google demoted at the hands of the update.

From there, I can jump into what’s happening at both the domain level and the page level – in terms of how the page ranks overall and, most importantly, what the page is doing with its content.

Case #1: Rankings For ‘Laser All In One Printer’

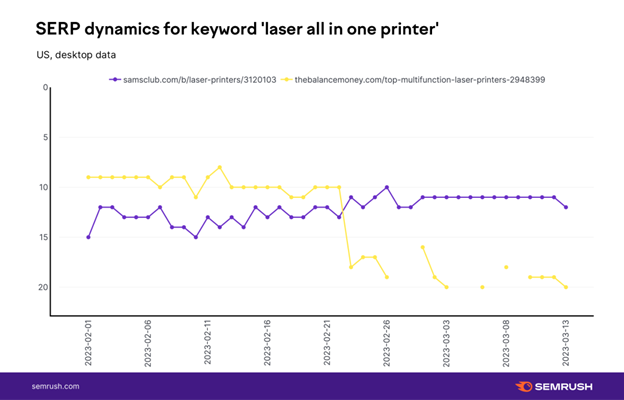

Screenshot from Semrush, March 2023

In the screenshot above, a page from The Balance went from ranking consistently at circa position 8 on the SERP to falling to position 20.

Looking at the page’s performance, we can see that the update had a noticeable impact on it across the board, not just for this particular keyword.

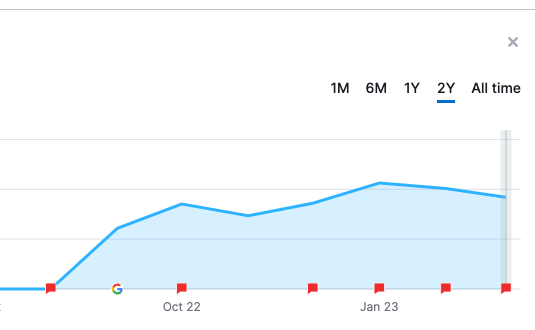

Screenshot by author, March 2023

Organic performance for The Balance shows the domain to have been impacted by the February 2023 Product Review Update.

At first glance, I thought this must be because the page simply added “2023” to the title tag and H1, along with updating the publish date.

A look at the Wayback Machine showed not a single word had changed in the course of a year, yet “2023” was spattered everywhere.

While I would speculate that Google could (should it want to) easily spot something like this, I noticed that the top-ranking product review page did exactly the same thing.

So, I decided to dive a little bit deeper. By that, I mean I went to the nature and quality (as I saw it) of the content itself.

Here, the content isn’t “bad,” and it’s not like the page doesn’t rank at all – but the content is very generic.

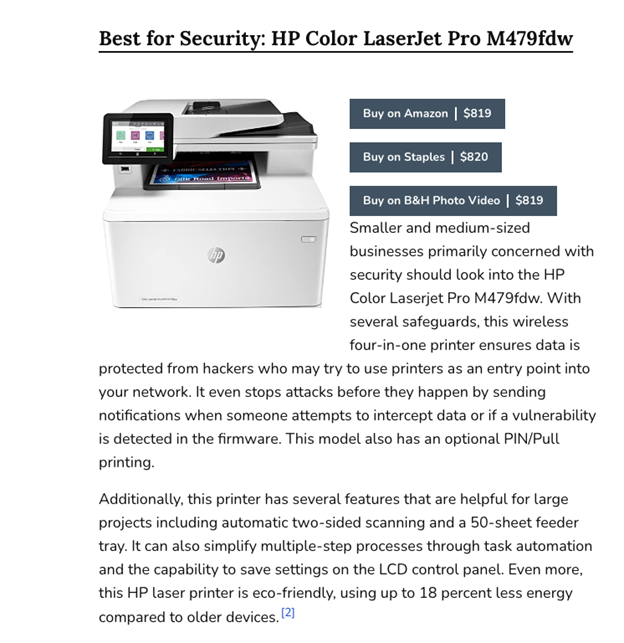

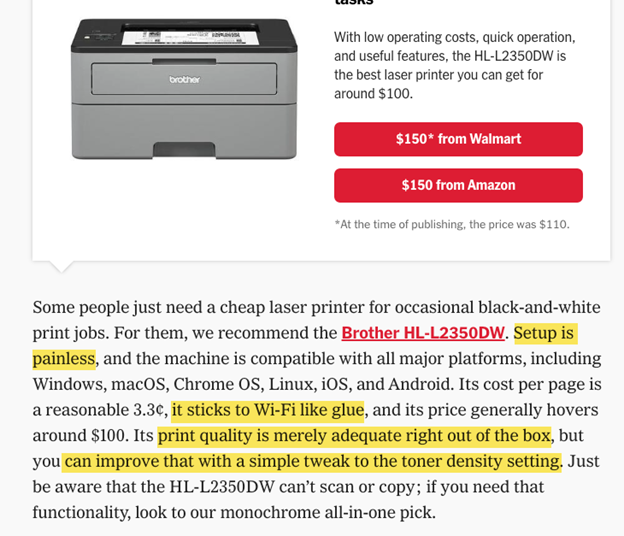

Screenshot from The Balance, March 2023

Let me put it this way: Spend a second reading some of the content in the image above. Now ask yourself: Could you have gotten this information from the product’s user manual?

The answer is “Yes, essentially.” The content here is generic. And while it may work for some keywords or in scenarios where there is not a lot of great content to compete with, it isn’t strong enough for this SERP.

Part of the reason is simply because of intent. Google doesn’t need to rank eight or nine product review pages for this query.

In fact, when I looked, it only ranks four product review pages among the initial nine organic results. That’s not a lot of top-ranking space for this type of page.

This means that the limited number of slots available for product reviews on the SERP raises the level of competition for ranking.

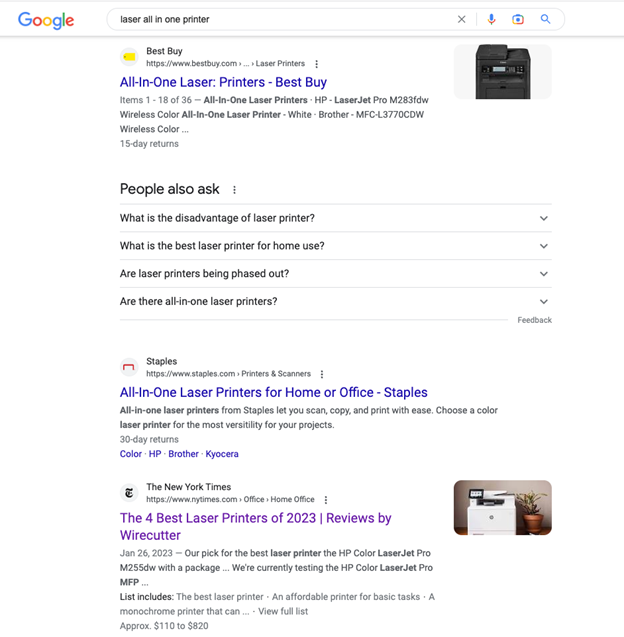

Screenshot from search for [laser all in one printer], Google, March 2023

The other reason this page isn’t strong enough to rank, in my opinion, is what its top competitor is doing.

Let’s dive into how the top-ranking product review page from Wirecutter handles the content.

To start, the information it offers would have to, all things being equal, come from some sort of intimate knowledge of the product and could not have been pulled from a user manual.

Screenshot from nytimes.com/wirecutter, March 2023

The page’s content attempts to take the product from the user’s point of view by addressing the considerations when using the product in question. That already separates the page from the more generic content I showed earlier.

It also aligns with Google’s guidance where Google says to “Evaluate the product from a user’s perspective.”

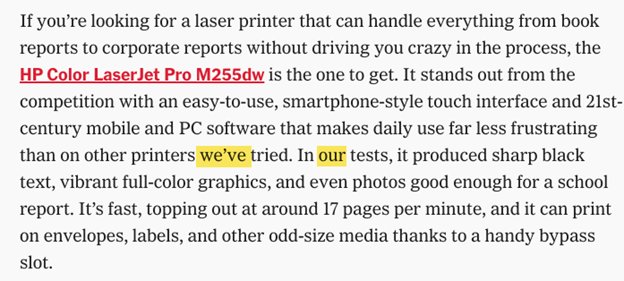

The second thing that stood out to me was the spattering of first-person (plural) language. There is a lot of “we” and “our” placed throughout the page.

Screenshot from nytimes.com/wirecutter, March 2023

In other words, the language structure of the page via the pronouns used (among other things) would seemingly indicate firsthand experience with the product.

While there are many differences between the two pages here, and while I am certain there is more to the ranking scenario than what I am presenting, I cannot, at the same time, deny the stark difference in approach these two pages take.

One page offers a cold and generic approach to reviewing the product. The other – both in its use of specific pronouns and, even more so, in its more robust, complex, and overall personal language structure – strongly indicates firsthand product familiarity.

Is Google now better able to detect and, therefore, reward this?

Let’s explore one more case to better support that conclusion.

Case #2: Rankings For ‘Portable Dog Carrier’

Screenshot from Semrush, March 2023

If all I had seen were a case or two where a site or page lost rankings during the update because it used generic content that could have been pulled from a user manual, I would not have written this post.

What spurred me on was seeing a good few cases where it seemed as if Google was purposefully rewarding content whose language structure indicated firsthand experience.

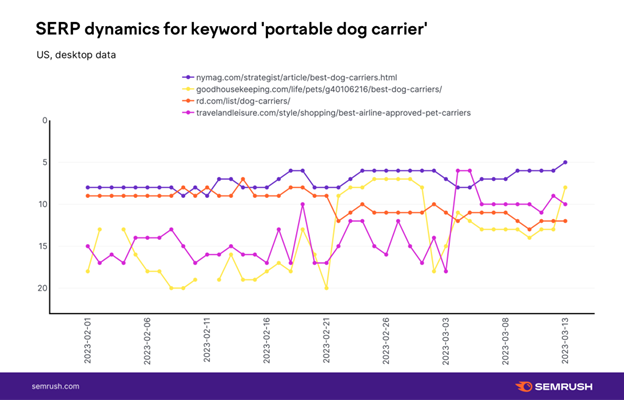

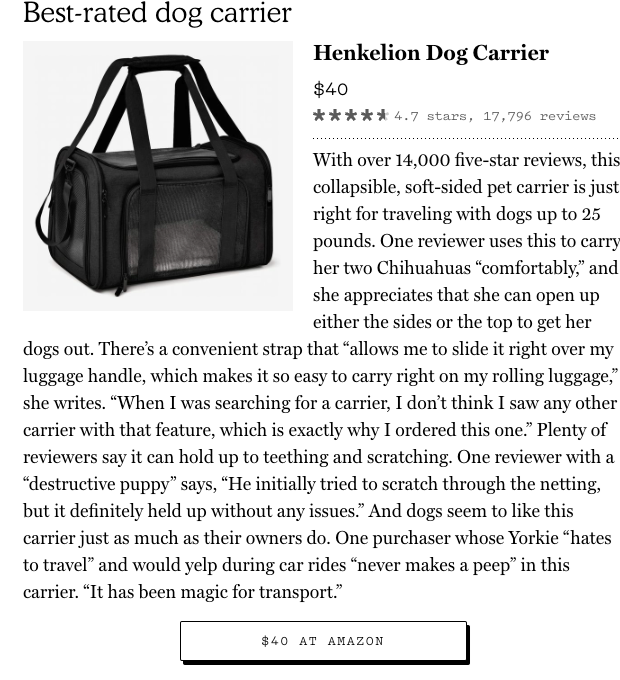

The most vivid case of this was for the keyword “portable dog carrier.”

Here, a page from Reader’s Digest went from ranking ~8 on the SERP to the top of “page 2.”

At the same time, pages from Travel and Leisure and Good Housekeeping moved from position ~15 to position ~10 and from position ~20 to position ~12, respectively.

The Strategist also saw a modest increase going from position ~8 to position ~6.
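To make those movements easier to compare, here is a minimal Python sketch that tallies the approximate positions quoted above. The figures are the rounded “~” values from this section, not exact Semrush exports, and the `positions` dictionary and `rank_change` helper are hypothetical names used only for illustration. The Reader’s Digest page is left out because only “the top of page 2” is given for it, not a specific position.

```python
# Approximate before/after positions for "portable dog carrier",
# taken from the rounded (~) figures quoted in the text above.
positions = {
    "Travel and Leisure": (15, 10),
    "Good Housekeeping": (20, 12),
    "The Strategist": (8, 6),
}


def rank_change(before: int, after: int) -> int:
    """Return positions gained; a positive number means the page moved up."""
    return before - after


changes = {site: rank_change(before, after) for site, (before, after) in positions.items()}

# List the biggest gains first.
for site, gained in sorted(changes.items(), key=lambda item: item[1], reverse=True):
    print(f"{site}: moved up {gained} positions")
```

A positive number is a gain, so a drop like the one The Balance saw in Case #1 (position 8 to position 20) would come out negative.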

Again, with these sorts of things, identifying “one true reason” to explain the ranking behavior is not the way to go – it’s most likely a whole heap of things that aggregate together.

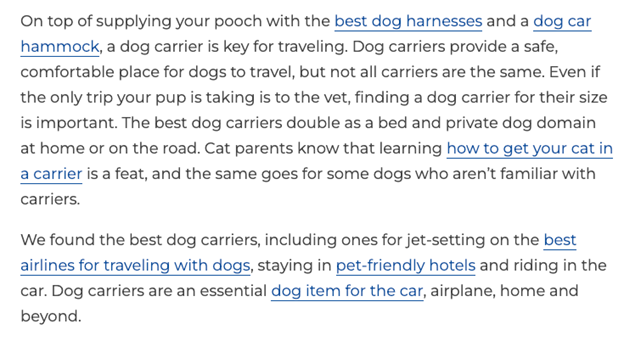

I speculate that one of those things was how the Reader’s Digest page went about internal linking to other articles and product review pages. I know internal linking is the great wonder of SEO, but in this case, it really felt very “commercial.”

Screenshot from rd.com, March 2023

To me, it’s almost as if this paragraph was written so that they could stuff as many internal links as possible. This leaves you to wonder if the content is really “helpful” in the end.

Internal linking practices aside, I think the page suffers the same problem as what we saw in regard to printers above. Namely, the content is too generic.

Screenshot from rd.com, March 2023

Again, could you not have read a user manual and written the very same paragraph? Probably.

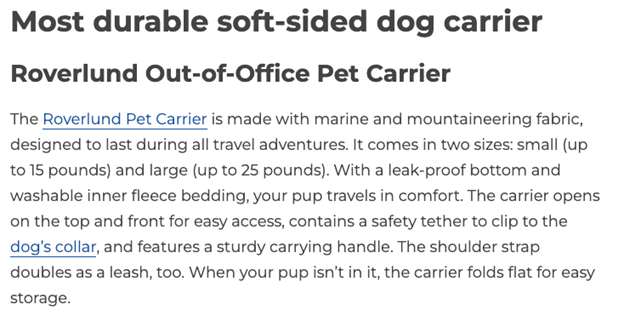

Even the pros and cons sections are a little too generic for my liking:

Screenshot from rd.com, March 2023

Here too, I feel I could read the box, look at a few images online, and come up with this list of pros and cons.

And it’s not just for this keyword; it would seem Google was not fond of the page any longer as a whole.

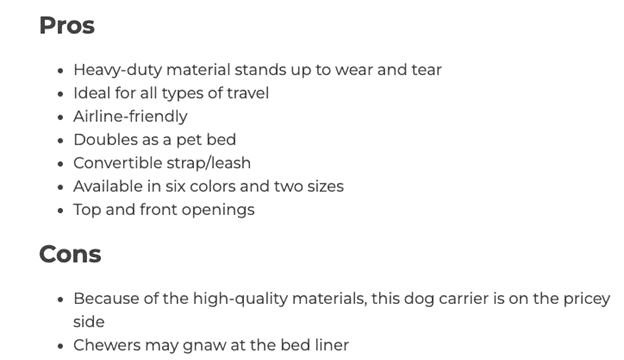

Screenshot from Semrush, March 2023

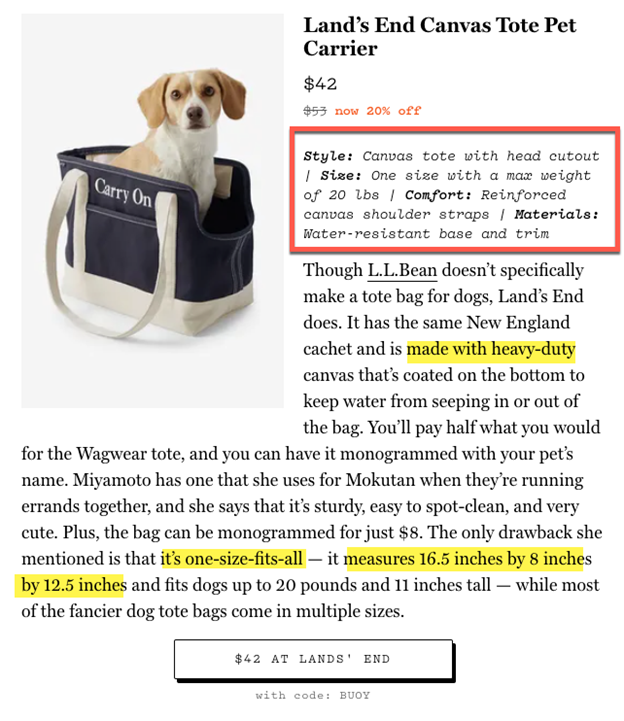

Let’s compare this with the pages that, even though they don’t rank at the very top of the SERP, did see a ranking boost attributed to the February 2023 PRU – both of which are doing some really interesting things that speak to the actual product experience.

Let’s start with the Good Housekeeping page, which saw a boost with the PRU across multiple keywords relevant to the page.

(It seems that the March 2023 Core Update has reversed some of those gains, though at the time of this writing, that update is still in its infancy. Parenthetically, this speaks to, perhaps, a need voiced by Glenn Gabe to unify these updates.)

Screenshot from Semrush, March 2023

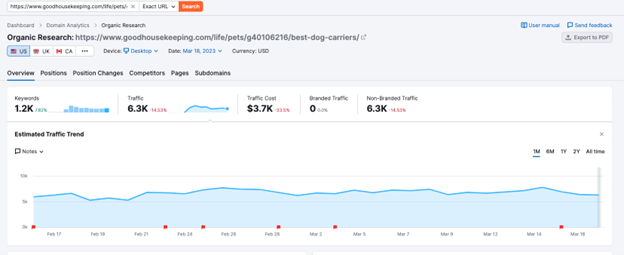

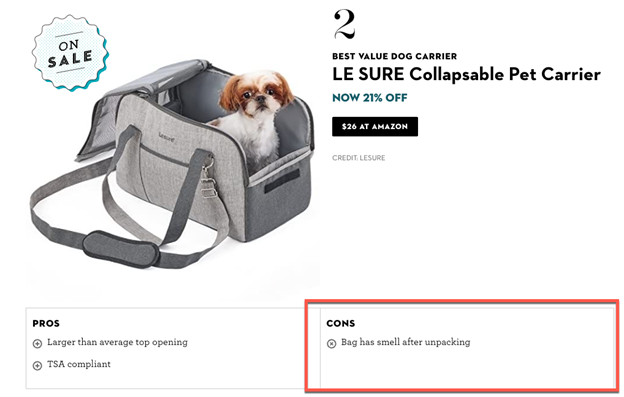

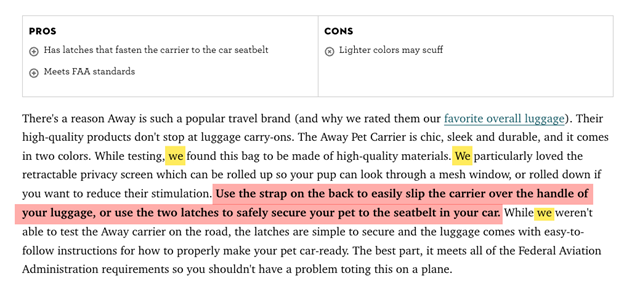

In contrast to the Reader’s Digest page, the pros and cons listed here speak directly to the reviewers’ personal experience with the product.

I mean, other than lying or reading it online somewhere, how would they know the product smells bad when unboxed unless the author unboxed the product?

Screenshot from GoodHousekeeping.com, March 2023

Furthermore, the page not only employs a healthy dose of first-person pronouns, but each product description offers the reader a piece of advice that is clearly intended to show you that the site actually used the product in question.

Screenshot from GoodHousekeeping.com, March 2023

So, while pronoun usage is a clear way to attempt to show firsthand experience as a reader (as opposed to a bot), the more obvious sign was the inclusion of tips that rely on intricate familiarity with the product.

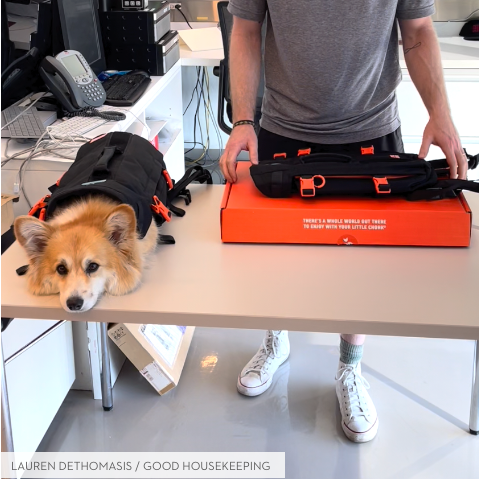

Lastly, and again unlike the Reader’s Digest page, the site did not rely on only stock and manufacturer photos. Rather, in certain instances, real photos from the site’s staff show the products with actual animals.

Screenshot from GoodHousekeeping.com, March 2023

This is literally right from Google’s guidance where they say:

“Provide evidence such as visuals, audio, or other links of your own experience with the product, to support your expertise and reinforce the authenticity of your review”

That’s not to say the page is perfect. Personally, I felt there could have been a little more description of the product itself. The point is that this is another case of a site deliberately trying to showcase firsthand product experience.

The same is true for the Travel and Leisure page.

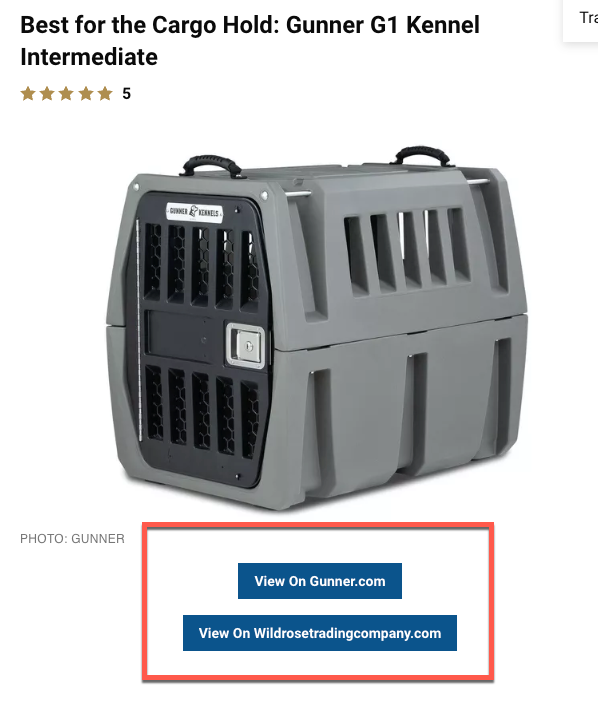

For starters, the page sometimes presents more than one seller concerning a product recommendation.

Screenshot from TravelandLeisure.com, March 2023

This, again, is straight from Google’s guidance:

“Consider including links to multiple sellers to give the reader the option to purchase from their merchant of choice.”

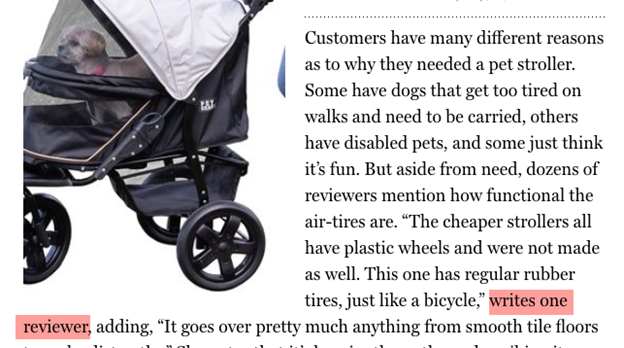

Also, like the Good Housekeeping page (but to an even greater extent), the page uses photos of the actual product with animals that were taken by the site’s staff.

Screenshot from TravelandLeisure.com, March 2023

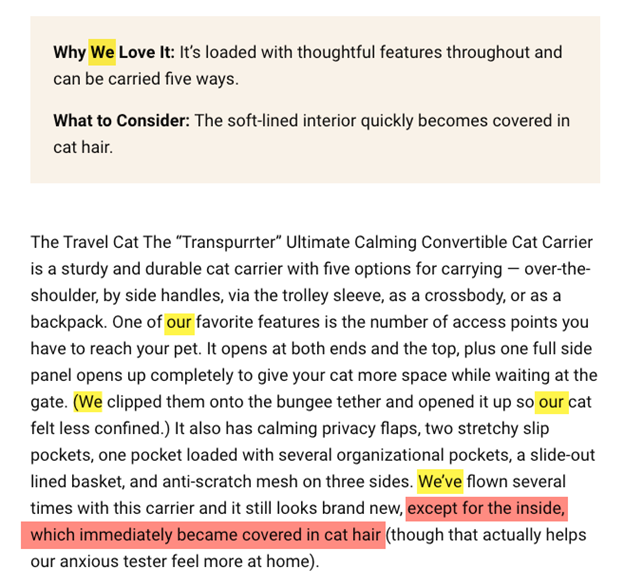

Of course, as you might imagine at this point, there is a healthy dose of first-person pronouns used in the review content itself.

Screenshot from TravelandLeisure.com, March 2023

However, what really stood out to me was how Travel and Leisure qualified its statements.

One of the things I’ve speculated about ever since Google started emphasizing firsthand experience as part of its PRU was the profiling of language structure.

That is, I had speculated that Google was using machine learning to profile language structure that does and doesn’t support the content being written by someone with firsthand experience.

It’s not a wild notion. It’s literally what machine learning is designed to do. It’s also not ridiculous when you compare the language structure itself.

You tell me – if I were reviewing a vacuum cleaner, which sentence would indicate I actually used it?

- Great vacuum for carpet.

- Great vacuum for carpet, but had a hard time with pet hair.

Obviously, the second sentence would seem to imply I actually used the product. There’s a lot to dissect there, but even the simple language modification and qualification are pretty easy to spot.

Looking at the previous image and the sentence I highlighted in red, what do you see? I see sentence modification and qualification.

The sentence modifies a general rule (“the carrier looks new”) with an exception (“the inside will not look new”).

This type of language structure indicates the likelihood that this was written by a human who used the product, and it’s not beyond reason to think Google can spot this.

After all, there is currently an array of tools on the market that essentially do this to determine the likelihood that a piece of content was written by AI.
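To illustrate what “profiling language structure” could look like at its very simplest, here is a toy Python sketch that counts first-person pronouns and qualifying words in a sentence. This is purely an illustration built on assumptions: the word lists, the `experience_signals` function, and the idea of counting words at all are hypothetical stand-ins, and nothing here reflects how Google’s systems actually work. A real machine learning model would look at far more than a handful of keywords.

```python
import re

# Hypothetical word lists chosen for illustration only.
FIRST_PERSON = {"i", "we", "me", "us", "my", "our", "i've", "we've", "i'm", "we're"}
QUALIFIERS = {"but", "however", "although", "though", "except", "yet"}


def experience_signals(sentence: str) -> dict:
    """Count first-person pronouns and qualifying words in a sentence."""
    # Normalize curly apostrophes so "I’ve" matches "i've".
    normalized = sentence.lower().replace("\u2019", "'")
    words = re.findall(r"[a-z']+", normalized)
    return {
        "first_person": sum(word in FIRST_PERSON for word in words),
        "qualifiers": sum(word in QUALIFIERS for word in words),
    }


generic = experience_signals("Great vacuum for carpet.")
qualified = experience_signals("Great vacuum for carpet, but had a hard time with pet hair.")
print(generic)
print(qualified)
```

Run on the two vacuum sentences from earlier, the first contains no qualifier and the second contains one (“but”). The counts only show that such differences are easy to detect mechanically, not that adding these words would help a page rank.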

A Page On The Rebound: Reversal From Prior Product Review Update Demotion

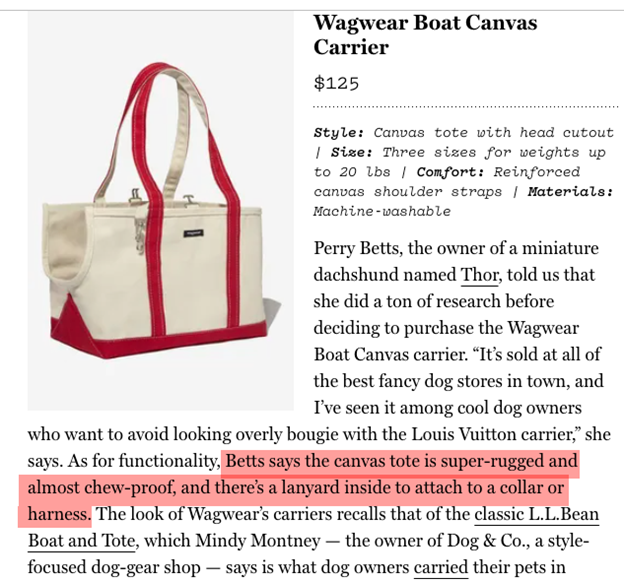

Let’s take a look at one last page from The Strategist that, prior to the update, was already ranking towards the top of the SERP but was rewarded further with the rollout.

The site has traditionally had an interesting strategy here. It has employed reviewers to look at the products and offer feedback, with the product review being a summary of what these reviewers have submitted. In a way, it’s pretty brilliant on multiple levels.

However, over the past year, the page has not fared well on the SERP, with the February 2023 PRU being reflective of a slight restoration (or the beginning of a fuller rank restoration – only time will tell).

I believe a significant part of this demotion is about the level of product detail supported by the page.

When I looked back at the page in the Wayback Machine, the page was thin on useful product information.

Screenshot from The Strategist, March 2023

While the page, like the ones I have lauded here, uses actual experience, it’s not very informative. I don’t walk away knowing much about the product itself here.

Firsthand experience is not good in and of itself; it has to be helpful in the context of understanding the product per se.

What I think happened here is that the March 2022 PRU rewarded the page, but it should never have, and it was subsequently demoted with the July 2022 PRU.

Screenshot by author, March 2023

This makes the page a great place to see and learn how Google might be looking at content.

One thing to note in this instance is what I already mentioned: the write-up started to talk about the product in greater detail.

Screenshot from The Strategist, March 2023

As you can see here, the page now offers a specific section that talks about the product’s specifications and beyond. In addition, the write-up references specific product points as well.

Again, it’s not enough to have personal experience; you have to connect that experience to the product meaningfully.

At the same time, the page, in certain instances, added multiple sellers for products, which, as I mentioned earlier, aligns with Google’s guidance.

Screenshot from The Strategist, March 2023

In addition to naming the contributing reviewer within the write-up, they also created a list of reviewers and influencers so we know who submitted their product experience in an easy-to-see fashion.

Screenshot from The Strategist, March 2023

That is a far cry from what the page did in prior versions, where all it did was quote anonymous sources.

Screenshot from The Strategist, March 2023

Now, the page is ready to benefit from the extreme amount of firsthand experience it presents.

Screenshot from The Strategist, March 2023

The page has the product details and the divulgence of its contributors, and it can now benefit, in my honest opinion, from the level of experience it offers.

It just shows that it’s never (or usually not) just one thing that moves the needle and makes the difference!

In Summary

My caveat is that I don’t feel comfortable stating a hard-and-fast rule, as there is an anecdotal nature to my analysis. However, there is a lot of evidence that pushes the notion I propose; namely, that Google can profile the language on the page in various ways to identify the likelihood that a product review represents actual product experience.

Whether it’s been in the analysis around other Google updates that I’ve put out there, or that SEO greats such as Glenn Gabe, Lily Ray, and Dr. Marie Haynes have published, we’ve seen Google do some significant things around understanding content and profiling for quality.

It’s logical to me that this process is active and continues to evolve with each resetting of whatever algorithm (be it the Product Review algorithm, the core algorithm, etc.).

Moreover, the ability of a machine learning property to profile language choice and structure is far from a pipe dream as, again, this is what these properties are designed to do.

Am I saying that you should stuff words like “we” and “our” into your product reviews? Absolutely not.

If you did, would you see a rank jump? Most likely not.

As I mentioned earlier, everything works together to create a “ranking soup.”

Like with the example from The Strategist, you can have a lot of “we,” “she,” and “our” interspersed within the content, but if the review doesn’t adequately address the product per se, is it worth Google ranking it?

Lastly, it’s worth noting that sites are beginning to add multiple sellers to some of the products on the page and are using images that indicate firsthand experience.

At a minimum, what you can take away from all of this is that product review sites are kicking it up a notch.

They’re more closely adhering to Google’s guidance and using a language structure that speaks to the user and reflects firsthand product usage.

The landscape in this vertical seems to be slowly changing in a visible and tangible way.

Featured Image: Vector Stock Pro/Shutterstock