Google has announced several new ways to use artificial intelligence (AI) to enhance its search features.

AI has played a crucial role in Google’s search technology since its early days, particularly in improving the company’s ability to understand language.

With further investment in AI, Google has expanded its understanding of information beyond text to include images, video, and even the real world.

Here’s how Google is using AI to improve the search experience.

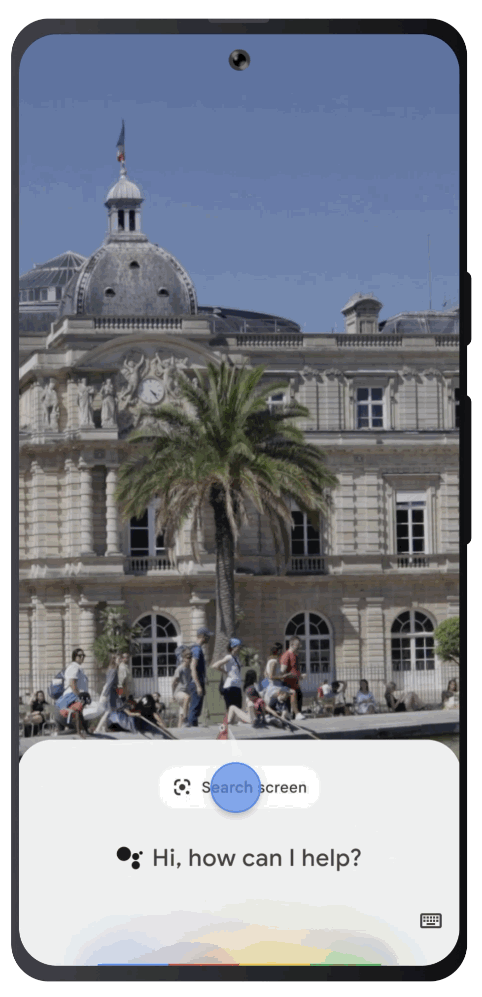

Search Your Screen With Google Lens

One way Google is applying AI is through its Lens feature.

Google Lens has grown increasingly popular and is now used for more than 10 billion searches each month.

With the new update, users will be able to use Lens to search what they see directly on their mobile screens.

This technology will be available globally on Android devices in the coming months.

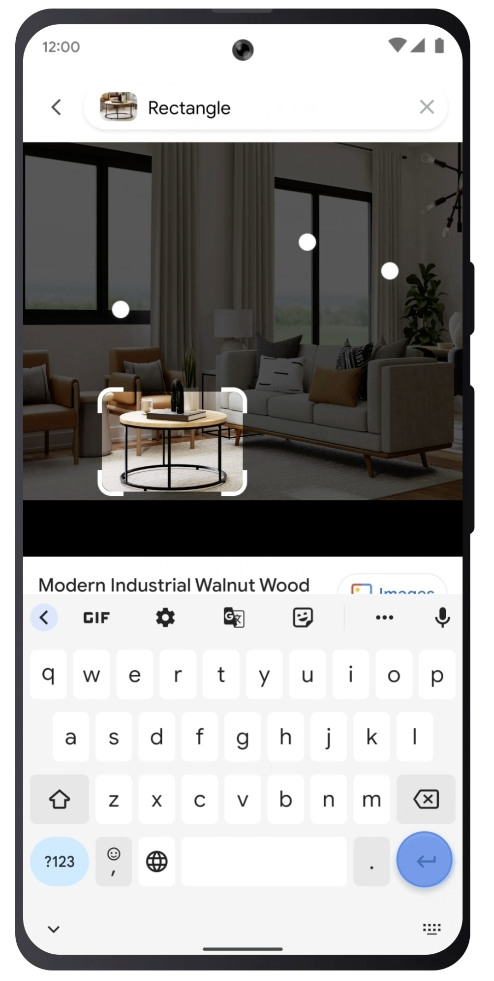

Multisearch

Another feature, multisearch, lets users search with an image and text at the same time.

This feature is available globally on mobile devices in all languages and countries where Lens is available.

Google is now making it possible for people to use multisearch on any image they see in mobile search results.

For example, if a user searching for “modern living room ideas” sees a coffee table they like but in the wrong shape, they can use multisearch to add text such as “rectangle” and find the version they’re looking for.

Google has also added the ability to use multisearch locally, helping users find what they need nearby.

This feature is currently available in English in the US and will expand globally soon.

Google is continuously working to make its search experiences more natural and visual. With the AI race heating up, we can likely expect more from the search giant in the coming months.

Featured Image: monticello/Shutterstock