OpenAI, the AI research firm behind ChatGPT, has released a new tool to distinguish between AI-generated and human-generated text.

Even though it’s impossible to detect AI-written text with 100% accuracy, OpenAI believes its new tool can help to mitigate false claims that humans wrote AI-generated content.

In an announcement, OpenAI says its new AI Text Classifier can limit the ability to run automated misinformation campaigns, use AI tools for academic fraud, and impersonate humans with chatbots.

When tested on a set of English texts, the tool correctly identified AI-written text only 26% of the time. It also incorrectly labeled human-written text as AI-written 9% of the time.
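
To put those two percentages in context, here is a minimal Python sketch of how often a flag would be correct. The 26% and 9% rates come from the figures above; the share of submitted texts that are actually AI-written (`ai_share`) is a hypothetical input, not something OpenAI has published, and the function name is purely illustrative.

```python
def share_of_flags_that_are_ai(ai_share, true_positive_rate=0.26, false_positive_rate=0.09):
    """Return the fraction of flagged texts that really are AI-written.

    ai_share is an ASSUMED fraction of submitted texts written by AI.
    The two rates default to the figures reported for the classifier.
    """
    flagged_ai = ai_share * true_positive_rate            # AI texts correctly flagged
    flagged_human = (1 - ai_share) * false_positive_rate  # human texts wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)


if __name__ == "__main__":
    # Two hypothetical mixes of AI-written and human-written submissions.
    for ai_share in (0.5, 0.1):
        precision = share_of_flags_that_are_ai(ai_share)
        print(f"AI share {ai_share:.0%}: {precision:.0%} of flagged texts are AI-written")
```

Under these assumptions, if half of the submitted texts are AI-written, roughly 74% of the flags are correct. If only one in ten is AI-written, that drops to roughly 24%, which is one way to see why a flag alone is weak evidence.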

OpenAI says its tool works better the longer the text is, which could be why it requires a minimum of 1,000 characters to run a test.
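
If you plan to check many texts, a quick length check before submitting can save time. The sketch below assumes the minimum is counted as raw characters, including spaces; how the tool itself counts is not stated, and `MIN_CHARS` and `long_enough` are illustrative names rather than part of any OpenAI API.

```python
MIN_CHARS = 1000  # minimum length reported for the classifier


def long_enough(text, min_chars=MIN_CHARS):
    """Return True if the text meets the minimum length.

    ASSUMPTION: every character counts, including whitespace.
    """
    return len(text) >= min_chars


if __name__ == "__main__":
    sample = "Search engine optimization helps pages rank. " * 30
    print(len(sample), long_enough(sample))
```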

Other limitations of the new OpenAI Text Classifier include the following:

- Can mislabel both AI-generated and human-written text.

- AI-generated text can evade the classifier with minor edits.

- Can be unreliable on text written by children and on non-English text, because it was trained primarily on English content written by adults.

With that in mind, let’s look at how it performs.

Using OpenAI’s AI Text Classifier

The AI Text Classifier from OpenAI is simple to use.

Log in, paste the text you want to test, and hit the submit button.

The tool rates how likely it is that AI generated the text you submitted, using one of five labels:

- Very unlikely

- Unlikely

- Unclear if it is

- Possibly

- Likely

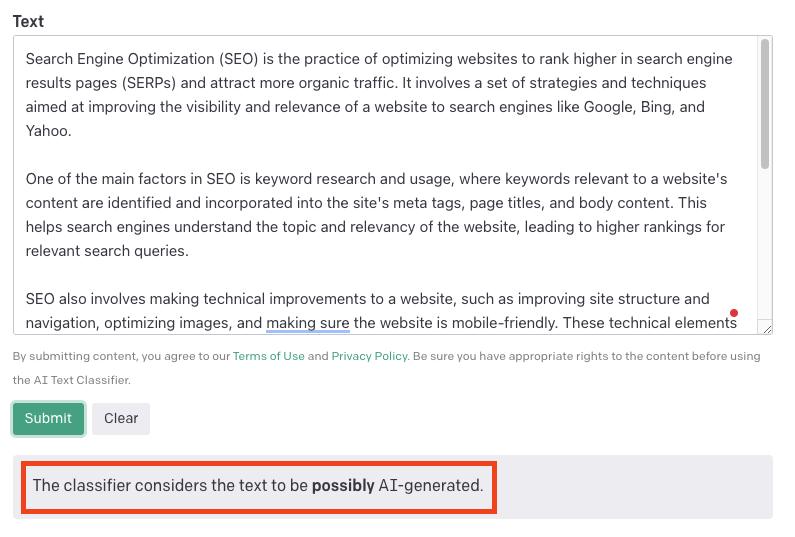

I tested it by asking ChatGPT to write an essay about SEO, then submitting the text verbatim to the AI Text Classifier.

It rated the ChatGPT-generated essay as possibly generated by AI, which is a strong but uncertain indicator.

Screenshot from: platform.openai.com/ai-text-classifier, January 2023

This result illustrates the tool’s limitations, as it couldn’t say with a high degree of certainty that the ChatGPT-generated text was written by AI.

By applying minor edits suggested by Grammarly, I reduced the rating from possibly to unclear.

OpenAI is correct in stating that it’s easy to evade the classifier. However, it’s not meant to be the only evidence that AI wrote something.

In an FAQ section at the bottom of the page, OpenAI states:

“Our intended use for the AI Text Classifier is to foster conversation about the distinction between human-written and AI-generated content. The results may help, but should not be the sole evidence when deciding whether a document was generated with AI. The model is trained on human-written text from a variety of sources, which may not be representative of all kinds of human-written text.”

OpenAI adds that the tool hasn’t been thoroughly tested to detect content containing a combination of AI and human-written text.

Ultimately, the AI Text Classifier can be a valuable resource for flagging potentially AI-generated text, but its rating shouldn’t be treated as a definitive verdict.

Featured Image: IB Photography/Shutterstock