Perplexity announced an update to its Copilot feature with GPT-3.5 Turbo fine-tuning from OpenAI and the introduction of Code Llama to Perplexity’s LLaMa Chat.

great use (and metrics) of the openai fine-tuning api: https://t.co/3n9IB3zitS

— Sam Altman (@sama) August 25, 2023

This means a more responsive and efficient AI-powered search experience without compromising quality.

For developers, it means access to Meta’s latest open-source large language model (LLM) for coding within 24 hours of its release.

Improving Speed And Accuracy With GPT-3.5 Turbo Fine-Tuning

According to Perplexity’s testing, the fine-tuned GPT-3.5 Turbo model is tied with the GPT-4-based model in human ranking.

Our fine-tuned GPT-3.5 model ties with the GPT-4-based model in human ranking on our task. This isn’t just about speed; it’s about delivering precise and accurate responses to your complex queries. Now you get the best of Perplexity at your finger tips. pic.twitter.com/jb86dZg91m

— Perplexity (@perplexity_ai) August 25, 2023

This could give Copilot users more confidence in the answers provided by Perplexity.

One notable improvement is a four- to fivefold reduction in model latency, which cut the time it takes to deliver search results by almost 80%.
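The two figures above are consistent with each other if the "almost 80%" is read as following from the latency speedup. That reading is an assumption, since the announcement does not say how the percentage was measured. A minimal Python sketch of the arithmetic, using a hypothetical helper named `latency_reduction`:

```python
def latency_reduction(speedup: float) -> float:
    """Fraction of response time saved when latency drops by a factor of `speedup`.

    Illustrative helper only; the name and approach are not from Perplexity.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    # New time is 1/speedup of the old time, so the saving is 1 - 1/speedup.
    return 1.0 - 1.0 / speedup


for factor in (4, 5):
    print(f"{factor}x faster -> {latency_reduction(factor):.0%} less time")
```

Under this reading, a 4x speedup removes 75% of the wait and a 5x speedup removes 80%, which matches the "almost 80%" figure.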

Faster response times could significantly improve user experience, especially for those who need almost instant answers to critical questions.

The transition to the fine-tuned GPT-3.5 model should also reduce inference costs – the computational expense of making predictions using a trained machine learning model.

The savings would allow Perplexity to invest in additional enhancements, ensuring that users continue to receive new features and better performance.

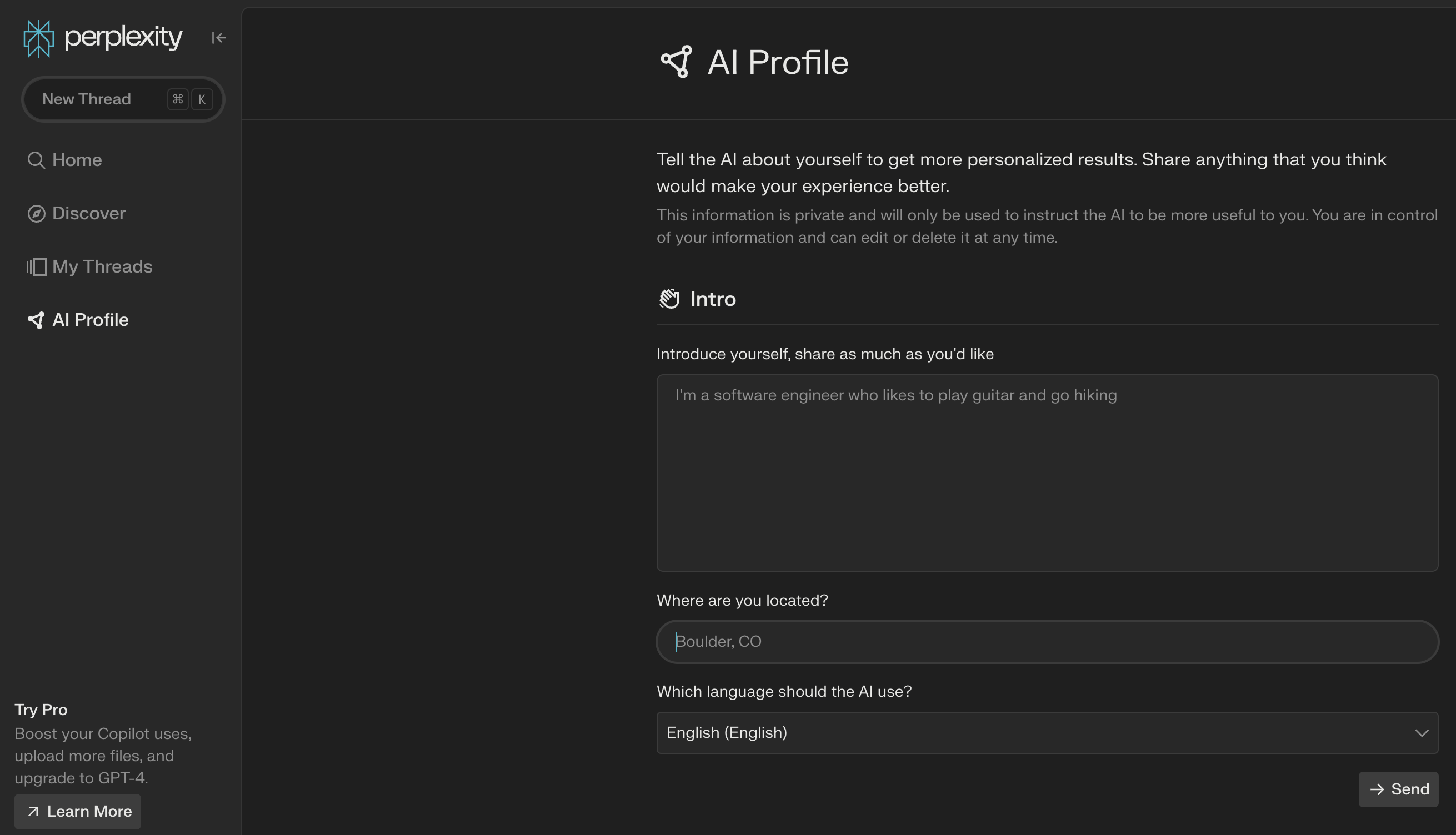

Perplexity users can also upload PDF files, focus search results on specific sources, and set up an AI profile to personalize search results.

Screenshot from Perplexity, August 2023

The AI profile is somewhat similar to the first portion of ChatGPT’s Custom Instructions.

Logged-in users can try Copilot free up to five times per hour. With a $20 monthly subscription, Perplexity Pro users can try Copilot 300 times per day with the option to switch to GPT-4.

Introducing Code Llama Instruct To Perplexity Labs LLaMa Chat

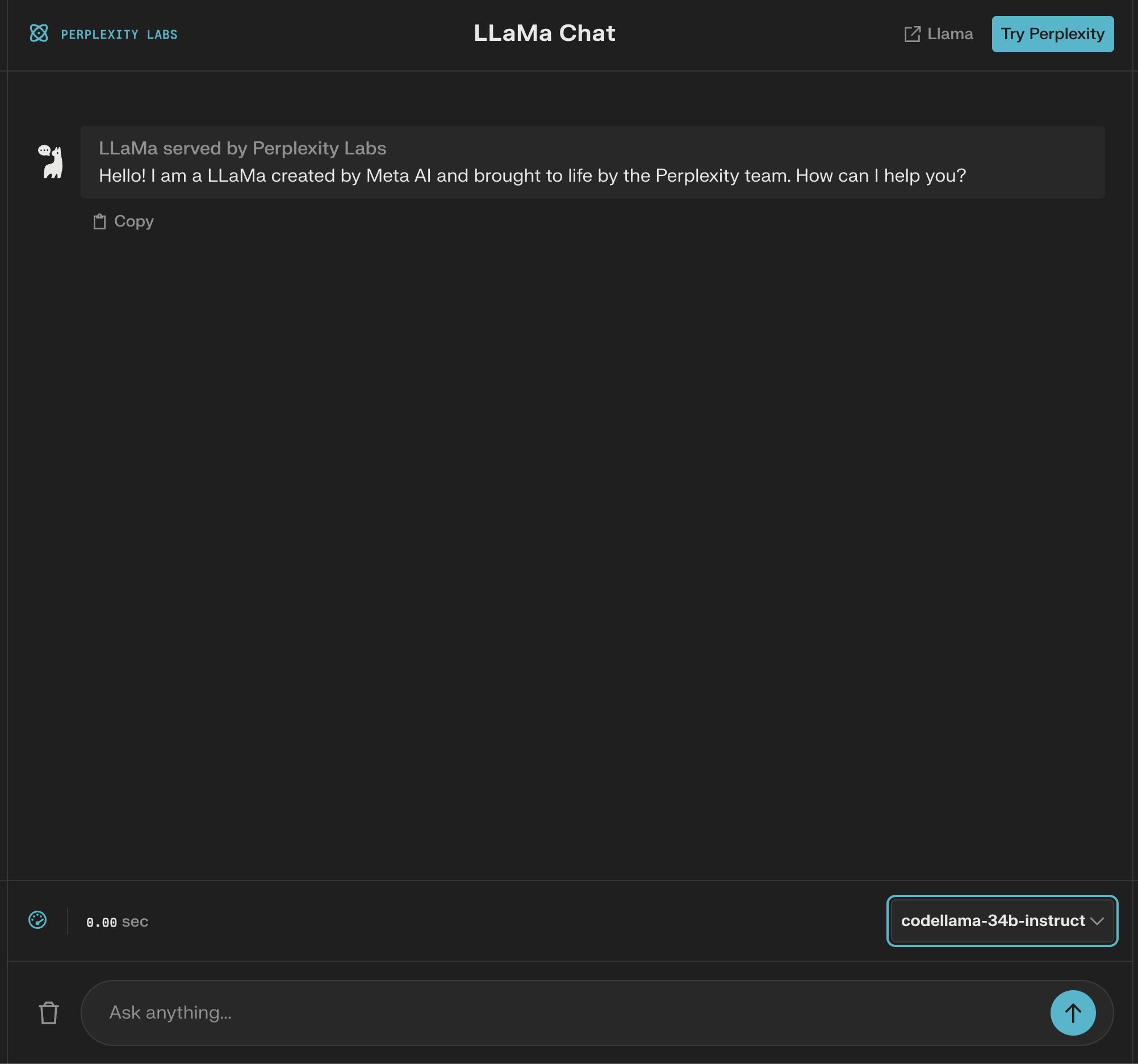

In addition to updating its AI-assisted search, Perplexity added Code Llama, a coding LLM from Meta AI, to Perplexity Labs LLaMa Chat to improve answers to technical questions.

Code LLaMA is now on Perplexity’s LLaMa Chat!

Try asking it to write a function for you, or explain a code snippet: 🔗 https://t.co/gyiDw6u6IJ

This is the fastest way to try @MetaAI’s latest code-specialized LLM. With our model deployment expertise, we are able to provide you… pic.twitter.com/hX90QulMz4

— Perplexity (@perplexity_ai) August 24, 2023

Users can access the open-source LLM and ask it to write a function or clarify a section of code. The Instruct model of Code Llama is specifically tuned to understand natural language prompts.

The quick addition of Code Llama to Perplexity Labs LLaMa Chat lets developers test its usefulness almost immediately after Meta announced its availability.

Screenshot from Perplexity Labs, August 2023

The Future Of AI Collaboration

The update to Perplexity’s AI-powered search Copilot with a fine-tuned GPT-3.5 Turbo model, together with the addition of Code Llama to LLaMa Chat, demonstrates how rapidly AI products can improve when big tech companies collaborate.

Featured image: Poca Wander Stock/Shutterstock