The most recent Helpful Content Update (HCU) concluded with the Google March core update, which finished rolling out on April 19, 2024. The updates integrated the helpful content system into the core algorithm.

To investigate changes in Google’s ranking of webpages, data scientists at WLDM and ClickStream partnered with Surfer SEO, which pulled data based on our keyword lists.

Implications Of The March Update And Google’s Goals

Google is prioritizing content that offers exceptional value to humans, not machines.

Logically, the update should prioritize topic authority: Creators should demonstrate thorough experience, expertise, authoritativeness, and trustworthiness (E-E-A-T) on a given webpage to assist users.

Your Money or Your Life (YMYL) pages should also be prioritized by HCU. When our health or money is at risk, we rely on accurate information.

Google’s Search Liaison, Danny Sullivan, confirmed that HCU works on a page level, not just sitewide.

“This [HCU] update involves refining some of our core ranking systems to help us better understand if webpages are unhelpful, have a poor user experience, or feel like they were created for search engines instead of people. This could include sites created primarily to match very specific search queries.

We believe these updates will reduce the amount of low-quality content on Search and send more traffic to helpful and high-quality sites.”

Google also released the March 2024 spam update, finalized on March 20.

SEO Industry Impact

The update significantly affected many websites, causing search rankings to fluctuate and even reverse course during the update. Some SEO professionals have called it a “seismic shift” in the SEO industry.

Frustratingly, over the past few weeks, Google has undermined the guidelines and algorithms central to its HCU system by releasing AI search results that include dangerous and incorrect health-related information.

“Google will do the Googling for you” #GoogleIO, May 14, 2024 pic.twitter.com/LgsPQiJd26

— Mukul Sharma (@stufflistings) May 14, 2024

SERP volatility remains to date, which suggests Google is still adjusting the March update.

Background

Methodology

In December 2023, we analyzed the top 30 results on Google SERPs for 12,300 keywords. In April 2024, we expanded our study by examining 428,436 keywords and analyzing search results for 8,460 of them. The study covered 253,800 final SERP results in 2024 (8,460 keywords × 30 results).

Our 2023 keyword set was more limited, providing a baseline for an expanded study. This allowed us to understand Google’s ranking signal changes after March and some of the “rank tremors” that occurred in early April.

We appended “how to use” to the front of keywords to create informational-intent keywords for both data sets. JungleScout provided access to a database of ecommerce keywords grouped and siloed using NLP. Our study focused on specific product niches.

Correlation And Measurements

We used the Spearman correlation to measure the strength and direction of associations between ranked variables.

In SEO ranking studies, a .05 correlation is considered significant. With hundreds of ranking signals, each one impacts the ranking only slightly.

Our Focus Is On-Page Ranking Factors

Our study primarily analyzes on-page ranking signals. By chance, our 2024 study was scheduled for April, coinciding with the end of Google’s most significant ranking changes in over eight years. Data studies require extensive planning, including setting aside people and computing resources.

Our key metric for the study was comprehensive content coverage: thorough, holistic writing about the primary topic or keyword on a page. Each keyword was matched to text on the pages of the top 30 URLs in the SERP, and we had highly precise measurements for scoring the NLP-derived topics used on each page.

Another key study goal was comparing webpages that cover health-sensitive topics with non-health pages. Would pages outside the now-infamous YMYL category be less sensitive to some ranking factors?

Since Google is looking for an excellent user experience, we pulled data on each webpage’s speed and Core Web Vitals in real time to see whether Google treats speed as a key component of the user experience.

Content Score As A Predictor

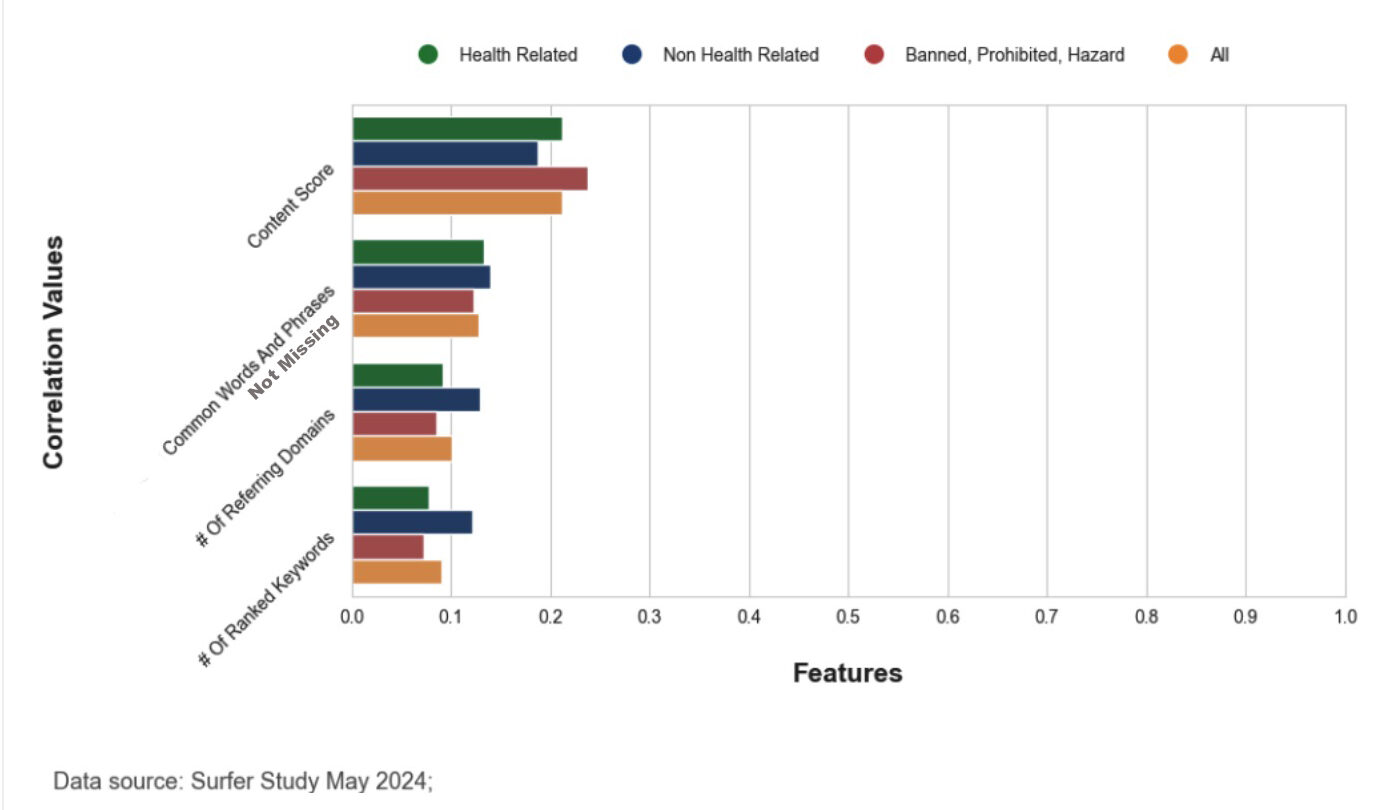

It’s not surprising that Surfer SEO’s proprietary “Content Score” was a better predictor of high ranking than any single on-page factor we examined. This held in both periods: the correlation was .18 in 2023 and .21 in 2024.

The score is an amalgamation of many ranking factors, and it appears to capture helpful content that’s meaningful for users. The small change in correlation between the two periods suggests the March update did not change many key on-page signals.

The Content Score consists of many factors, including:

- Usage of relevant words and phrases.

- Title and your H1.

- Headers and paragraph structure.

- Content length.

- Image occurrences.

- Hidden content (i.e., alt text of the images).

- Main and partial keywords – not only how often but where exactly those are used.

… and many more good SEO practices.
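Surfer SEO doesn’t publish how the Content Score combines these factors, so the following is only a minimal Python sketch of what an “amalgamation” of on-page factors can look like. It assumes each factor is compared with a target (for example, the median of top-ranking competitors) and the results are averaged with weights. The factor names, weights, and the `factor_score` and `composite_score` helpers are hypothetical and are not Surfer SEO’s formula.

```python
# Hypothetical weights for illustration only; Surfer SEO's real formula is proprietary.
WEIGHTS = {
    "relevant_terms": 4,  # usage of relevant words and phrases
    "title_h1": 1,        # keyword present in title and H1
    "structure": 2,       # headers and paragraph structure
    "length": 2,          # content length
    "images": 1,          # image occurrences
}


def factor_score(value: float, target: float) -> float:
    """Return 0..1: how close a page's value comes to the target, capped at 1."""
    if target <= 0:
        return 1.0
    return min(value / target, 1.0)


def composite_score(page: dict, targets: dict, weights: dict = WEIGHTS) -> float:
    """Weighted average of per-factor scores, scaled to 0..100."""
    total_weight = sum(weights.values())
    weighted = sum(w * factor_score(page[name], targets[name]) for name, w in weights.items())
    return 100 * weighted / total_weight


# Hypothetical page values and competitor targets.
page = {"relevant_terms": 30, "title_h1": 1, "structure": 8, "length": 1200, "images": 2}
targets = {"relevant_terms": 60, "title_h1": 1, "structure": 10, "length": 1500, "images": 4}
print(round(composite_score(page, targets), 1))  # 67.0
```

Capping each factor at 1 means a page gains nothing from overshooting a target, which is one simple design choice; a real scoring system may also penalize excess, for example, for keyword overuse.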

More About Correlations And Measurements In The Study

Niches were chosen because we wanted domains with multiple URLs to appear in our study. It was important to get many niche and “specialty” oriented sites, as is the case for most non-mega sites.

Most data studies overlook how a group of URLs from one domain tells a story: their keyword sets are so randomized that mega websites account for the vast majority of URLs in the results.

The narrow topics also meant fewer keywords with extreme ranking competition. Many ranking studies use a preponderance of keywords with over 40,000 monthly searches, but most SEO professionals don’t work for websites that can rank in the top 10 for those. This study is biased toward less competitive keywords, and we didn’t look at Google keyword search volume – just the volume on Amazon.

Our keywords had more than 10 searches per month on Amazon (via JungleScout). However, once “how to use” was appended to the front of a keyword, its Google search volume was often less than 10 a month.

The “dangerous, prohibited, banned” group was excluded from most comparisons of health vs. non-health. Many of these were very esoteric topics or Amazon needed six to 10 words to describe them.

Most SEO professionals don’t work for the 50 largest websites, so we wanted results that help the vast majority of SEO pros.

Here’s How We Generated Different Keyword Types

For example, we appended “buy” to the product keyword “adobe professional” in one instance and “how to use” in another.

| Product | Category | Search Intent | Appended | Keyword |
|---|---|---|---|---|
| adobe professional | software | informational | how to use | how to use adobe professional |

We examined data using the Spearman rank-order correlation formula. Spearman calculates the correlation between the ranks of two variables, and the coefficient ranges from -1 to 1. A coefficient of 1 or -1 would mean a perfect monotonic relationship between the two variables.

The Spearman correlation is used instead of Pearson because of the nature of Google search results; they are ranked by importance in decreasing order.

Spearman’s correlation compares the ranks of two datasets, which fits our goal better than Pearson’s. We used .05 as our threshold for a meaningful correlation.

When we show a correlation of .08, we read it as a ranking signal roughly twice as strong as one measuring .04. A correlation above .05 indicates a positive relationship, and one below -.05 a negative relationship; values between -.05 and .05 indicate no meaningful correlation. A negative correlation means that as one variable increases, the other tends to decrease.

Many of the domains in the study cover outlier or niche topics, or are small because little time and money has been invested in them. That is, first and foremost, why they don’t rank well.

That is also why we looked for “controls”: domains with similar investments of time, money, and web development/design quality that differ on one dimension, for example, health vs. non-health topics.

Correlation is not causation. We wanted to “control” for some large factors to better isolate their effects on results. We did this with graph visualizations.

Google uses potentially thousands of factors, so isolating independent variables is very difficult. Correlations have been used for more than a century in sciences where variables can’t be fully controlled. They are accepted science, and to say otherwise is a fool’s errand.

Keyword Categories And Classifications

Our keywords were search terms related to products.

Using narrow niches lets us cluster topics that are very much not YMYL vs. those that are.

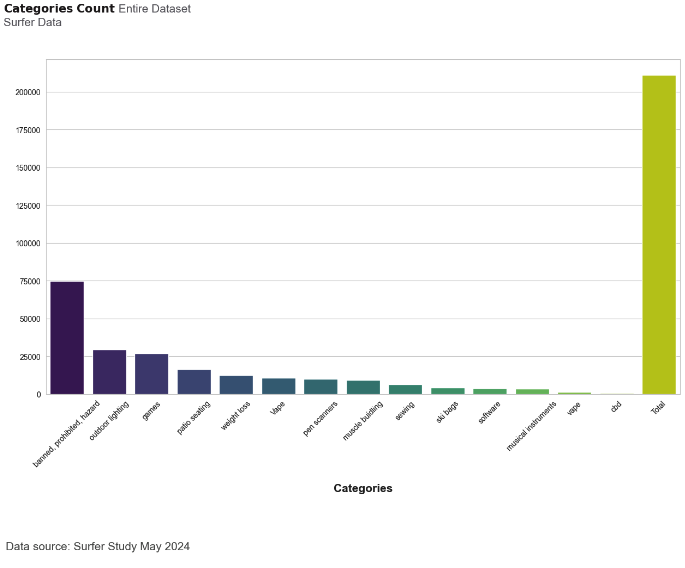

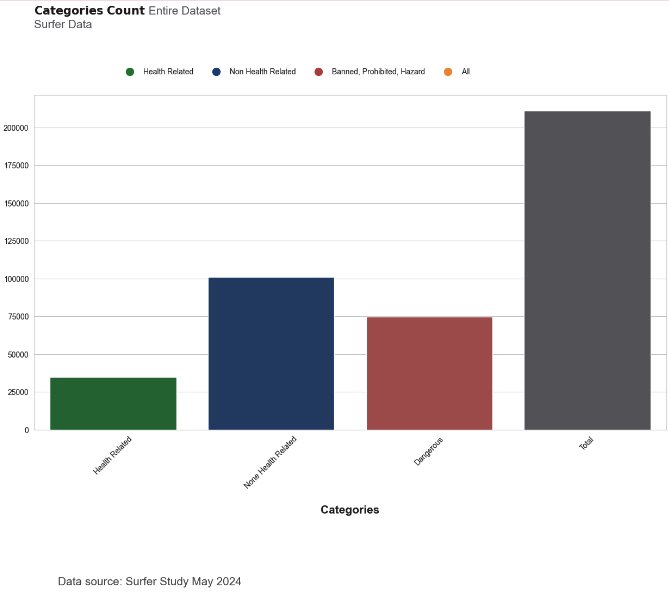

Image from author, June 2024

For example, CBD and vape keywords are banned from Google Ads, so they are well suited to our health-related keyword set. The FDA and others consider muscle building and weight loss two of the riskiest (read: dangerous) health-related categories on Amazon.

We chose the other non-health categories because they were near-poster children of innocuous niches.

The “dangerous, prohibited, banned” keywords come from products that are manually removed from Amazon’s Seller Central page list.

Each category fits into one of three classifications (the x-axis shows the number of keywords).

Image from author, June 2024

Detailed Findings And Actionable Insights

Importance Of Topic Authority And Semantic SEO

The largest on-page ranking factor is the use of topics related to the searched keyword phrase (our measure of topic authority and semantic SEO).

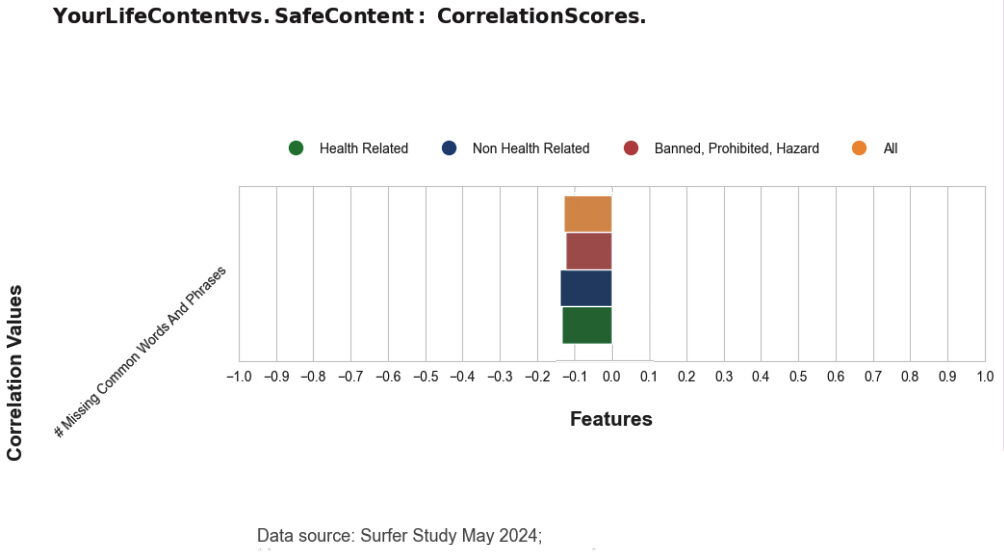

For “missing common keywords and phrases,” we found a correlation of -.11 in December 2023, which strengthened to -.13 in April 2024. These numbers are calculated by examining the relationship between the metric and a page’s Google ranking.

A stronger negative correlation, like -.13, indicates that pages omitting these keywords tend to rank significantly lower.

Image from author, June 2024

Surfer SEO’s algorithm typically reveals 10-100 words and phrases that should be included to cover the topic comprehensively.
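Surfer SEO’s own method is proprietary, but the idea behind a “missing common keywords and phrases” metric can be sketched in a few lines of Python. The sketch assumes a term counts as “common” when it appears on at least half of the top-ranking pages. It uses single words and a hand-supplied stopword list in place of real NLP topic extraction, and the helper names, the 50% threshold, and the sample text are all hypothetical.

```python
import re
from collections import Counter


def terms(text, stopwords=frozenset()):
    """Lowercased set of words in text, minus stopwords (a stand-in for NLP topics)."""
    return set(re.findall(r"[a-z0-9']+", text.lower())) - stopwords


def common_terms(top_pages, min_share=0.5, stopwords=frozenset()):
    """Terms that appear on at least min_share of the top-ranking pages."""
    counts = Counter()
    for page in top_pages:
        counts.update(terms(page, stopwords))  # each page counts a term at most once
    needed = min_share * len(top_pages)
    return {term for term, n in counts.items() if n >= needed}


def missing_common_terms(page, top_pages, min_share=0.5, stopwords=frozenset()):
    """Common terms from the top pages that the given page never uses, sorted."""
    return sorted(common_terms(top_pages, min_share, stopwords) - terms(page, stopwords))


top_pages = [
    "Dose the CBD oil under the tongue",
    "Hold CBD oil under your tongue for a minute",
    "CBD oil dose depends on body weight",
]
stop = frozenset({"the", "your", "for", "a", "on"})
print(missing_common_terms("CBD oil tastes earthy", top_pages, stopwords=stop))
# ['dose', 'tongue', 'under']
```

The length of the returned list is the kind of count that, in this study, correlated negatively with ranking.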

This factor is so strong that it correlates with ranking more closely than the monthly traffic of the domain a webpage is on (domain traffic is why, for example, articles on Amazon.com tend to outrank those published on small websites).

A domain’s traffic is a measure of authority (and, perhaps, trust to some extent). Domain Rating (Ahrefs) and Domain Authority (Moz) are other ways to measure a website’s ability to rank highly in the SERP. However, they rely much more on links, an off-page ranking factor.

This is a novel finding. We’ve never seen a large Google ranking study demonstrate such high importance of topical authority. At the same time, none of those studies used such highly precise on-page data, examining text across thousands of search result pages.

If you’re not paying attention to natural language processing and topic modeling, a.k.a. semantic SEO, you’re more than a decade late: the Hummingbird algorithm launched in 2013. Six years later, the sub-algorithm of Hummingbird appeared: BERT.

BERT (Bidirectional Encoder Representations from Transformers) is a language model developed by Google that uses a Transformer encoder, pretrained by predicting masked words, to learn contextual representations of words from the text on both sides of each word. It’s particularly important in helping Google understand the meaning of users’ queries.

Health-Related Vs. Non-Health Pages

We found that Google’s algorithms appear more sensitive to on-page factors when returning results about health-sensitive topics. To rank highly in Google, YMYL pages need more comprehensive topic coverage, and this has become more important since the March update than it was in December.

Image from author, June 2024

Generally, YMYL search results prioritize content from government sites, established financial companies, research hospitals, and very large news organizations. Sites like Forbes, NIH, and official government pages often rank highly in these areas to ensure users receive reliable and accurate information.

More About The Massive March Update And YMYL

YMYL websites started getting a great deal of attention in the SEO community in 2018, when Google rolled out the “Medic Update.” Health and finance categories have been on a rollercoaster ride in the SERPs since then.

One way of understanding the changes is that Google tries to be more cautious in ranking pages related to personal health and finances. This might be especially true when topics lack broad consensus, are controversial, or have an outsized impact on personal health and finance choices.

Most SEO pros agree that there is no YMYL ranking factor per se. Instead, websites in these sectors have E-E-A-T signals that are examined with far higher demands and expectations.

When we look at on-page ranking signals, many other factors interfere with what we are trying to measure. For example, in link studies, SEO pros would love to isolate how different types of anchor texts perform. Unless you own over 500 websites, you don’t have enough control over what affects minor differences among anchor text variables.

Nevertheless, we find differences in correlations between health vs. non-health ranking signals in both of our studies.

The “dangerous, prohibited, banned” pages were even more sensitive to on-page optimization than the non-health-related group.

Since the Content Score we used amalgamates many factors, it is especially good at showing these differences. A small factor like “body missing/having common words” (topic coverage) is too weak a signal on its own to show a pronounced difference between two types of content pages.

The number of keywords a domain ranks for and the website’s (domain’s) estimated monthly traffic strongly predict how a page ranks.

These measure domain authority. Google doesn’t use its own results (organic search traffic) as a ranking factor, but organic traffic is one of the most useful stats for understanding how successful a site is with organic search.

Most SEO pros evaluate domains via scores like DA (Moz) or DR (Ahrefs), which weigh link profiles much more heavily than actual traffic driven via organic search.

Ranked keywords and estimated traffic are critical ways to gauge a domain’s E-E-A-T. They show the website’s success, but not the page’s. Looking at these external ranking factors on a page level would give more insights, but it is important to remember that this study focuses on on-page factors.

Ranked keywords had a strong relationship, with correlations of .11 for 2023 and .09 for 2024. For traffic estimations, we saw .12 (2023) and .11 (2024).

Having a page on a larger website predicts higher rankings. One of the first things SEO pros learn is to avoid going after parent topics and competitive keywords where authority sites dominate the SERPs.

Five years ago, when most SEO practitioners weren’t paying attention to topic coverage, the best way to create keyword maps or plans was using the “if they can rank, we can rank” technique.

This strategy is still useful alongside topic modeling, but it relies heavily on being certain that the competitor sites you analyze have similar authority and trust.

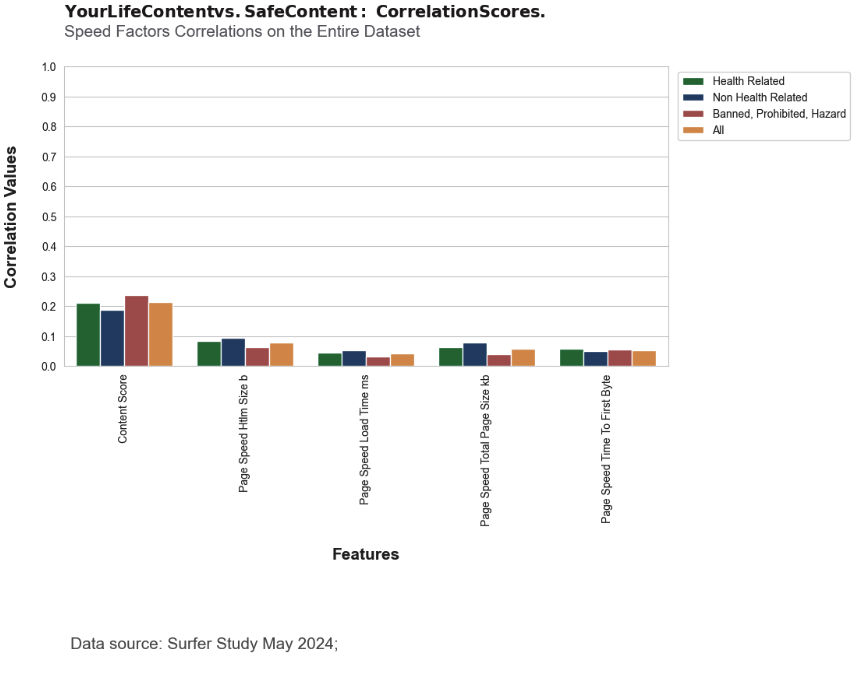

Website Speed And High-Ranking Pages

Google created a lot of hoopla when it announced:

“Page experience signals [will] be included in Google Search ranking. These signals measure how users perceive the experience of interacting with a webpage and contribute to our ongoing work to ensure people get the most helpful and enjoyable experiences from the web…the page experience signals in ranking will roll out in May 2021.”

We looked at four site speed factors. These are:

- HTML size (in bytes).

- Page speed time to first byte.

- Load time in milliseconds.

- Page size in kilobytes.
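Two of these four metrics, time to first byte and HTML size, are easy to approximate yourself. The Python sketch below is an illustration under stated assumptions, not the study’s tooling. It uses only the standard library and treats the moment `urlopen` returns (after the status line and headers arrive) as the first byte, so the figure includes DNS, TCP, and TLS setup. It also times only the HTML document; total page size and full load time would need a browser that fetches images, scripts, and stylesheets. The `measure` helper name is hypothetical.

```python
import time
import urllib.request


def measure(url, timeout=10.0):
    """Approximate TTFB, HTML size, and HTML download time for a single URL."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        # urlopen returns once the response headers have been read, so this
        # approximates time to first byte (connection setup included).
        ttfb_ms = (time.perf_counter() - start) * 1000
        html = response.read()  # raw HTML bytes only; no images, CSS, or JS
    html_load_ms = (time.perf_counter() - start) * 1000
    return {
        "ttfb_ms": round(ttfb_ms, 1),
        "html_size_bytes": len(html),
        "html_load_ms": round(html_load_ms, 1),
    }
```

For example, `measure("https://example.com/")` returns a dictionary with the three values. Repeating the call several times and taking the median gives a steadier TTFB estimate than a single request.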

In our 2023 study, we did not find a correlation with the page speed measurements. That was surprising. Many website owners placed too much emphasis on them last year. The highest correlation was just .03 for both time to first byte and HTML file size.

However, we saw a significant jump since the March update. This aligns squarely with Google’s statement that user experience is its priority for Helpful Content. Time to first byte is the most important factor, as it was five years ago. HTML file size was the second speed factor that mattered most.

April 2024 Speed correlations (Image from author, June 2024)

In 2016, I oversaw the first study to show Google measures page speed factors other than time to first byte. Since then, others have also found even bigger effects on higher ranking by having fast sites in other areas like “Time to First Paint” or “Time to First Interactive.” However, that was before 2023.

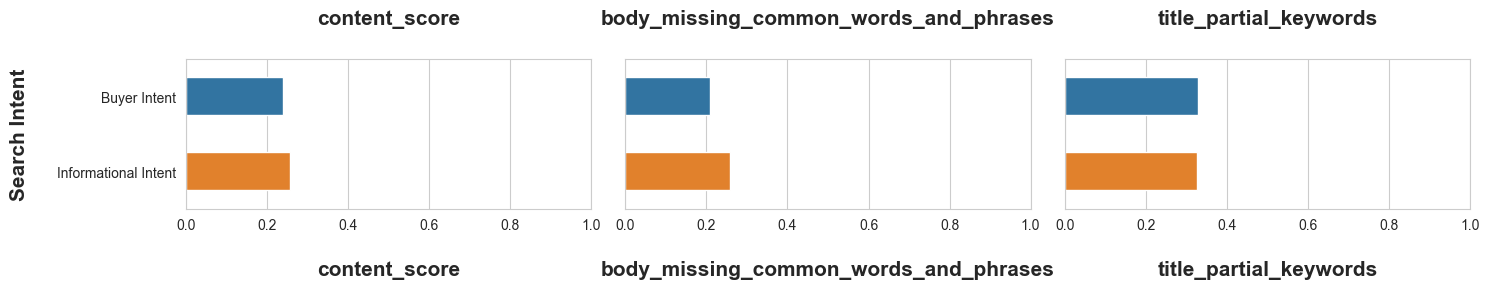

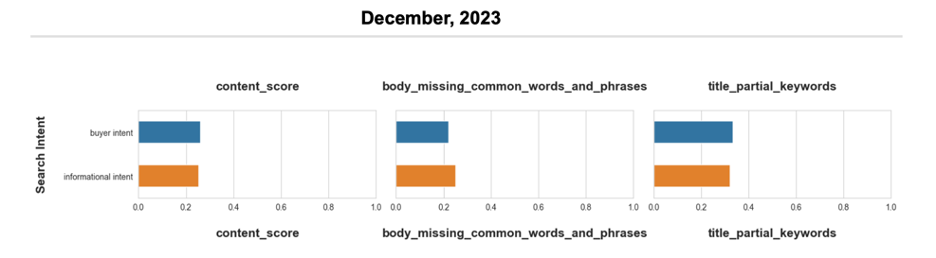

Informational Vs. Buy Intent Content

Different search intents require different approaches.

Content must be better optimized for informational searches compared to buyer intent searches.

We created two groups for user intent query types. This is another test we’ve not seen done with a big data set.

Image from author, June 2024

For buyer intent, “for sale” was appended to the end of some search terms and “buy” to the front of others. This was applied randomly to half of all keywords in the study. The other half had “how to use” appended to the beginning.

Since so many factors influence rank, these differences, if there are any, get a bit lost. We did see a small difference: informational pages, which tend to have more comprehensive topic coverage, are slightly more sensitive when they are missing related keywords.

Our hypothesis was that ecommerce pages are not expected to be as holistic in word coverage. They have authority from user reviews and unique images not found elsewhere. An informational page has fewer such signals to prove its authoritativeness and trustworthiness, so the writing is more critical.
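The intent assignment described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the random half-split uses a seeded shuffle, and because the article doesn’t say how buyer-intent keywords were divided between the “buy” prefix and the “for sale” suffix, the sketch simply alternates between them. The `assign_intents` function and the product list are hypothetical.

```python
import random


def assign_intents(products, seed=0):
    """Randomly give half the products a buyer-intent keyword and half an informational one.

    Returns (product, intent, keyword) tuples.
    """
    rng = random.Random(seed)  # seeded so the split is reproducible
    shuffled = list(products)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    rows = []
    for i, product in enumerate(shuffled):
        if i < half:
            # Assumption: alternate between the two buyer-intent modifiers.
            keyword = f"buy {product}" if i % 2 == 0 else f"{product} for sale"
            rows.append((product, "buyer", keyword))
        else:
            rows.append((product, "informational", f"how to use {product}"))
    return rows


for row in assign_intents(["adobe professional", "cbd oil", "yoga mat", "protein powder"]):
    print(row)
```

Seeding the generator makes the split reproducible, so the same keyword always lands in the same intent group when the study is rerun.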

Prior to the March update, we saw a more pronounced difference.

Image from author, June 2024

Google knows users don’t want to see too much text on an ecommerce page. If they are ready to buy, they’ve typically done some due diligence on what to buy and have completed most of their customer journey.

Ecommerce sites use more complex frameworks, and Google can tell much about buyer user experience with technical SEO page factors that are less important on informational pages.

In addition, for sites with more than a handful of products, category pages tend to have the more thorough content that users and Google look for before diving deeper.

Challenges And Considerations

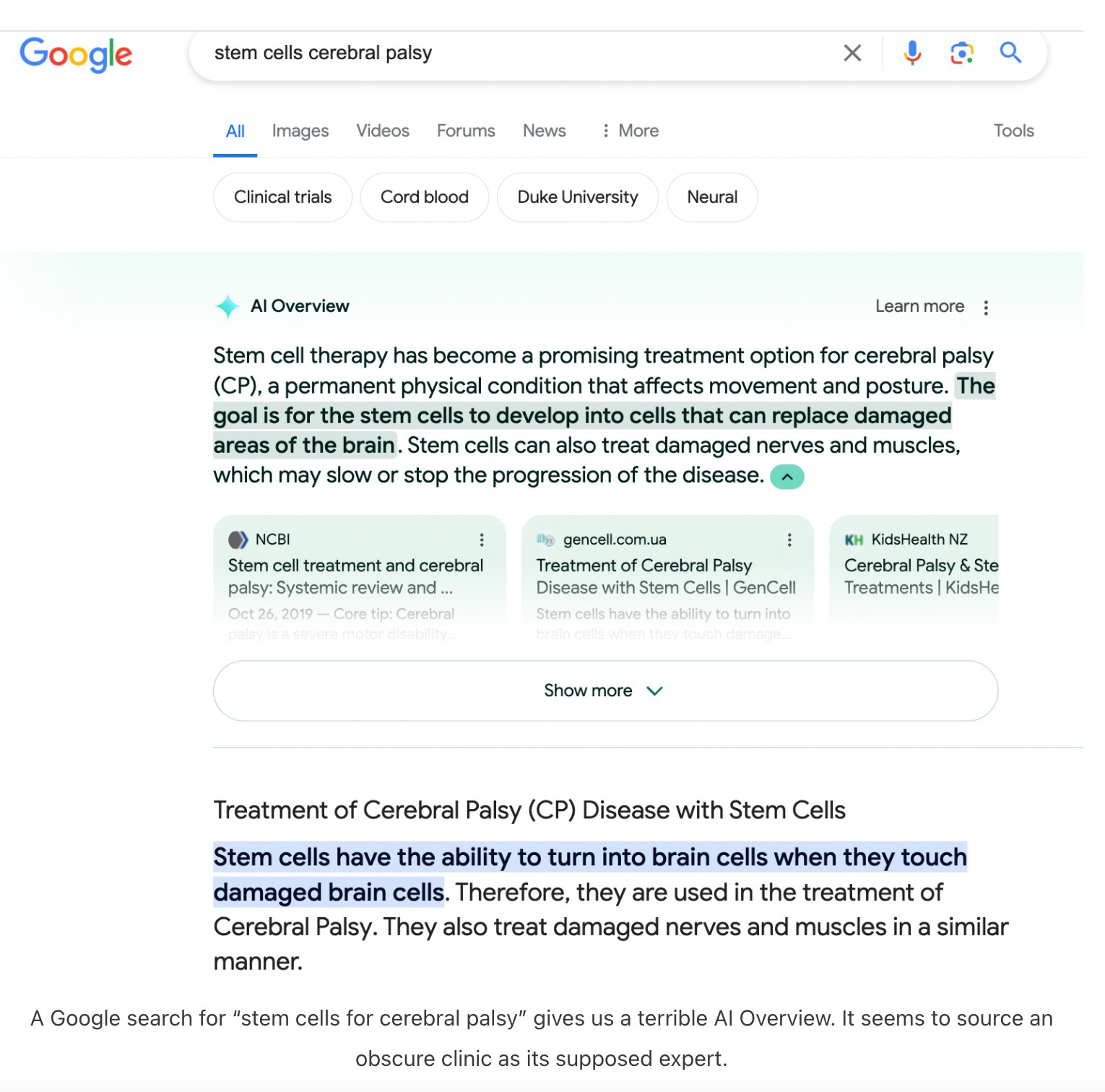

Google is under intense scrutiny because of its AI search results that give incorrect, dangerous answers to health questions. Google reduced the number of YMYL queries that trigger AI Overviews, but it has left a double standard in place: websites appearing in Search must have content from personal experience, expertise, etc. Yet Google’s AI Overviews come from scraping content to generate answers via large language models known to make mistakes (hallucinations).

There was outrage over answers to uncommon searches that produced ridiculous results for health-related questions (for example, suggesting users use glue with their pizza). In our view, the bigger issue is that AI results don’t use the same tough standards the search giant expects of website owners.

For example, a search for “stem cells cerebral palsy” in late May produced an AI Overview that sources an “obscure clinic as its supposed expert.”

Screenshot from search for [stem cells cerebral palsy], June 2024

Potential For Over-Optimization

An interesting consideration posed by HCU is whether having too many of the same entities and topics as the existing top results for the same topic is considered “creating for search engines.”

There’s no way to answer that with a correlation study, but Google likely looks for subtle clues of overoptimization. Its use of machine learning suggests it examines pages for such clues, including related topics.

Keyword “stuffing” stopped being a valid SEO tactic. Perhaps “topic stuffing” will someday become a no-no. We didn’t measure that, but since having fewer related words and phrases hurts ranking, it does not seem to be an issue now.

Recommendations Based On Findings

Enhance Topic Coverage And Comprehensive Content

To achieve high rankings, ensure your content is thorough and covers topics extensively. This is often referred to as “semantic SEO.”

By focusing on related topics, you can create content that addresses the primary subject and covers related subtopics, making it more valuable to readers and search engines alike.

Actionable Tips:

- Research Related Topics: Use tools like SurferSEO.com, Frase.io, AnswerThePublic.com, Ahrefs.com, or Google’s Keyword Planner to identify related topics that complement your main content. Look for questions people are asking about your main topic and address those within your content.

- Create Detailed Content Outlines: Develop comprehensive outlines for your articles, including primary and secondary topics. This ensures your content covers the subject matter in depth and addresses related subtopics.

- Use Topic Clusters: Consider organizing your content into clusters, where a central “pillar” page covers the main topic broadly and links to “cluster” pages that dive deeper into related subtopics. This helps search engines understand the breadth and depth of your content.

- Incorporate User Intent: Understand the different intents behind search queries related to your topic (informational, navigational, transactional) and create content that satisfies these intents. This could include how-to guides, detailed explanations, product reviews, and more.

- Update Regularly: Keep your content fresh by regularly updating it with new information, trends, and insights. This shows search engines that your content is current and relevant.
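As a quick check of the pillar-and-cluster structure, here is a small Python sketch that audits internal links. It assumes you already have a map of each URL to the internal URLs it links to (for example, from a site crawl). The URLs and the `audit_cluster` helper are hypothetical, and the cluster-to-pillar back-link check reflects a common convention rather than anything measured in this study.

```python
def audit_cluster(pillar, clusters, links):
    """List missing links in a pillar/cluster topic model.

    links maps each URL to the set of internal URLs it links to.
    """
    missing = []
    for page in clusters:
        if page not in links.get(pillar, set()):
            missing.append(f"{pillar} -> {page}")  # pillar should link to each cluster page
        if pillar not in links.get(page, set()):
            missing.append(f"{page} -> {pillar}")  # assumed convention: link back to the pillar
    return missing


links = {
    "/cbd-oil/": {"/cbd-oil/dosage/"},
    "/cbd-oil/dosage/": {"/cbd-oil/"},
    "/cbd-oil/side-effects/": set(),
}
print(audit_cluster("/cbd-oil/", ["/cbd-oil/dosage/", "/cbd-oil/side-effects/"], links))
```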

Meet Higher Standards Of E-E-A-T For Health-Related Content

If your website covers health or finance-related topics, it’s crucial to meet the high standards of experience, expertise, authoritativeness, and trustworthiness (E-E-A-T). This ensures your content is reliable and credible, which is essential for user trust and search engine rankings.

Actionable Tips:

- Collaborate with qualified healthcare professionals to create and review your content.

- Include clear author bios that highlight their credentials and expertise in the field.

- Cite reputable sources and provide references to studies or official guidelines.

- Regularly review and update your health content to ensure it remains accurate and current.

- Build links and ensure you’re getting brand mentions off-site. Our study didn’t focus on this, but it’s critical.

Improve Website Speed And User Experience

Website speed and user experience are increasingly important for SEO. To enhance load times and overall user satisfaction, focus on improving the “time to first byte” (TTFB) and minimizing the HTML file size of your pages.

Actionable Tips:

- Optimize your server response time to improve TTFB. This might involve upgrading your hosting plan or optimizing your server settings.

- Minimize page size by compressing images, reducing unnecessary code, and leveraging browser caching.

- Use tools like Google PageSpeed Insights to identify and fix performance issues.

- Ensure your website is mobile-friendly, as most traffic comes from mobile devices.

Future Research

We compared the largest 15% of websites with the remaining 85% to see whether they benefited more from the March update. There was no meaningful change.

However, slews of small publishers spoke up about the update’s outsized impact on them. We wish we had more time to examine this area. It’s important to understand how Google dramatically changed the landscape of Search.

Further studies are needed to understand the impact of semantic SEO and user intent on rankings. Google is looking at this as a site-wide signal, so the SEO community can learn a lot from a study that looks at entity and topic coverage site-wide.

Other site-wide studies with big data sets are also absent from SEO research. Could we measure site architecture across 1,000 websites to find other practices that Google rewards?

Additional Notes And Footnotes

Editor’s Note: Search Engine Journal, ClickStream, and WLDM are not affiliated with Surfer SEO and did not receive compensation from it for this study.

All Metrics Measured And Analyzed In Our Study

| Metric | Description |
|---|---|
| For Domain Estimated Traffic | Surfer SEO’s estimation based on search volumes, ranked keywords, and positions. |
| For Domain Referring Domains | Number of unique domains linking to a domain (this data is somewhat outdated). |
| URL Domain Partial Keywords | Number of partial keywords in the domain name. |
| Title Exact Keywords | Number of exact keywords in the title. |
| Body Words | Word count. |
| Body Partial Keywords | Number of partial keywords in the body (variations of the exact keywords; a word matches if it starts with the same three letters). |
| Links Unique Internal | Number of links on the page pointing to the same domain (internal outgoing links). |
| Links Unique External | Number of links on the page pointing to other domains (external outgoing links). |
| Page Speed HTML Size (B) | HTML size in bytes. |
| Page Speed Load Time (ms) | Load time in milliseconds. |
| Page Speed Total Page Size (KB) | Page size in kilobytes. |
| Structured Data Total Structured Data Types | Number of schema markup types embedded on the page, e.g., local business, organization = 2. |
| Images Number of Elements | Number of images. |
| Images Number of Elements Outside Links Toggle Off | Number of images, including clickable images like banners or ads. |
| Body Number of Words in Hidden Elements | Number of hidden words (e.g., display: none). |
| Above the Fold Words | Number of words visible within the first 700 pixels. |
| Above the Fold Exact Keywords | Number of exact keywords visible within the first 700 pixels. |
| Above the Fold Partial Keywords | Number of partial keywords visible within the first 700 pixels. |
| Body Exact Keywords | Number of exact keywords used in the body. |
| Meta Description Exact Keywords | Number of exact keywords used in the meta description. |
| URL Path Exact Keywords | Number of exact keywords within the URL. |
| URL Domain Exact Keywords | Number of exact keywords within the domain name. |
| URL Path Partial Keywords | Number of partial keywords within the URL. |
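The table’s definitions of exact and partial keyword matches can be illustrated with a short Python sketch. It reflects one reading of the “Body Partial Keywords” description: a body word counts as a partial match if its first three letters equal the first three letters of any keyword word, so exact occurrences are counted too. The tokenizer, the `count_keywords` helper, and the sample text are hypothetical; Surfer SEO’s actual matching rules may differ.

```python
import re


def words(text):
    """Lowercased alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def count_keywords(body, keyword, prefix_len=3):
    """Return (exact, partial) keyword counts for a page body."""
    body_words = words(body)
    kw_words = words(keyword)
    if not kw_words:
        return 0, 0
    n = len(kw_words)
    # Exact: the full keyword phrase appears as consecutive words.
    exact = sum(body_words[i:i + n] == kw_words for i in range(len(body_words) - n + 1))
    # Partial: a word shares its first prefix_len letters with any keyword word.
    prefixes = {w[:prefix_len] for w in kw_words if len(w) >= prefix_len}
    partial = sum(w[:prefix_len] in prefixes for w in body_words)
    return exact, partial


body = "Using a yoga mat? Mats for yoga differ. Use your yoga mat daily."
print(count_keywords(body, "yoga mat"))  # (2, 6)
```

Here “Mats” counts as a partial match for “mat,” while “differ” and “daily” do not match either keyword word.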


Featured Image: 7rainbow/Shutterstock