Now that RSAs (responsive search ads) have replaced ETAs (expanded text ads) in Google Ads, it may be time to rethink your strategies for optimizing ads.

Optimizing RSAs requires a different approach from the one most advertisers have used for years.

While you’ll still want to use a similar methodology to decide which text variations to test, the way you run a test that produces statistically significant results has changed.

RSA Testing Is Different From ETA Testing

Ad testing used to consist of A/B experiments where multiple ad variations competed against each other.

After accruing enough data for each of the contending ads, a winner could be picked by analyzing the right metrics.

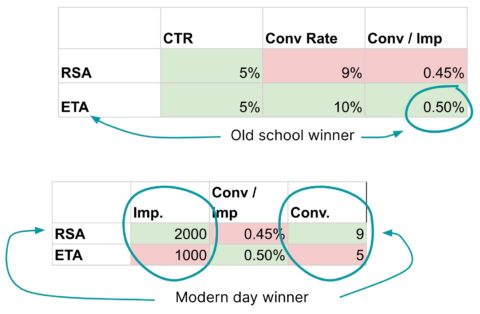

A popular metric to determine the winning ad used a combination of conversion rate and clickthrough rate to calculate “conversions per impression” (conv/imp).

The ad with the best rate could be declared the winner after enough data had accrued to allow for statistical significance.
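To make the old method concrete, here is a minimal Python sketch of how conv/imp can be calculated and compared between two ads. It assumes a standard two-proportion z-test as the significance check, which is one common choice and not necessarily what any particular tool used; the function names and the numbers in the usage note are made up for illustration.

```python
from math import erf, sqrt


def conv_per_imp(impressions, clicks, conversions):
    """Conversions per impression = CTR x conversion rate."""
    if impressions == 0 or clicks == 0:
        return 0.0
    ctr = clicks / impressions
    conversion_rate = conversions / clicks
    return ctr * conversion_rate  # same as conversions / impressions


def two_proportion_z_test(conv_a, imp_a, conv_b, imp_b):
    """Two-sided z-test on conversions per impression for ads A and B.

    Assumption: each impression is an independent trial that either
    converts or does not. Returns (z, p_value).
    """
    rate_a = conv_a / imp_a
    rate_b = conv_b / imp_b
    pooled = (conv_a + conv_b) / (imp_a + imp_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / imp_a + 1 / imp_b))
    z = (rate_a - rate_b) / std_err
    # Normal CDF expressed through the error function.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value
```

For example, with hypothetical ads that each received 10,000 impressions, `two_proportion_z_test(50, 10_000, 30, 10_000)` compares 50 conversions against 30; a p-value below 0.05 would conventionally count as significant at the 95% level.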

This technique for finding winning ads no longer works for three reasons.

Let’s take a look at what these are.

Reason 1: You Can Only Test 3 RSAs Per Ad Group

In the days of ETA ad testing, advertisers could expand their A/B test into an A/B/C/D/… test and keep adding more challengers to their experiment until they reached the limit of 50 ads per ad group.

While I never met an advertiser who ran 50 concurrent ads in an experiment, I’ve seen many who tested five or six at a time.

But Google now caps ad groups at a maximum of three RSAs, and that alone changes the way ad testing has to work.

Reason 2: You Don’t Get Full Metrics For Ad Combinations

Remember that each RSA can have up to 15 headlines and up to 4 descriptions, so even a single RSA can now be responsible for generating 43,680 variations.

That’s far more than the 50 variations of ETAs we were allowed to test in the past.
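The article doesn’t spell out how the 43,680 figure is counted. One way to arrive at it, assuming every ad shows three headlines in a specific order plus either one or two descriptions in a specific order, is sketched below. The counting rule is an assumption that happens to reproduce the number; real ads can also show fewer headlines.

```python
from math import perm

MAX_HEADLINES = 15
MAX_DESCRIPTIONS = 4

# Ordered ways to fill three headline positions from 15 headlines.
headline_orders = perm(MAX_HEADLINES, 3)  # 15 * 14 * 13

# Ordered ways to show two descriptions, plus ways to show just one.
description_orders = perm(MAX_DESCRIPTIONS, 2) + perm(MAX_DESCRIPTIONS, 1)

total_variations = headline_orders * description_orders
print(total_variations)  # 43680
```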

So when a user sees an RSA, only a subset of the headlines and descriptions submitted by the advertiser are actually shown in the ad.

What’s more, which specific headlines and descriptions are shown changes from auction to auction.

When comparing the performance of two RSAs to one another, you’re really comparing the performance of 43,680 possibilities of ad A to 43,680 possibilities of ad B.

That means that even if you find ad A to be the winner, there are a lot of uncontrolled variables in your experiment that invalidate any results you may find.

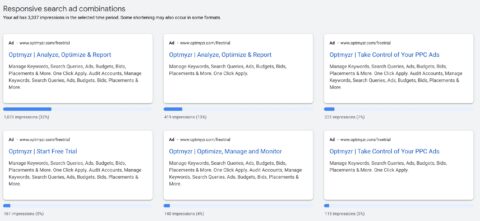

Ad combinations reports in Google Ads show which combinations of RSA assets are shown as ads. Screenshot by author, 2022.

To get more useful data, you’d have to look at the combinations report, which shows exactly which headlines and descriptions were combined for each ad.

But the problem with this data is that Google only shares the number of impressions.

And to pick the winning ad, we need CTR and conversion rate, two metrics Google no longer provides at this level of granularity.

Reason 3: Ad Group Impressions Now Depend As Much On The Ad As The Keywords

But perhaps the most surprising reason ad testing methodology needs to evolve is that the old methods assumed impressions depended only on the keywords of an ad group.

RSAs have challenged this assumption: the impressions of an ad group can now depend as much on the ads as on the keywords.

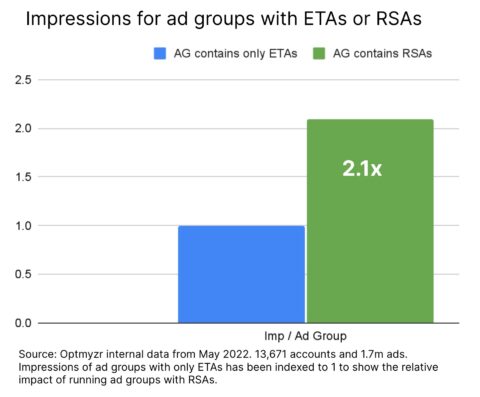

In Optmyzr’s May 2022 RSA study, we found that ad groups with RSAs got 2.1 times as many impressions as those with only ETAs.

Image from Optmyzr, June 2022

And regardless of whether this dramatic increase in impressions for ad groups with RSAs is due to improved ad rank and quality score, or whether it’s because Google has built in a preference for this ad type, the end result is the same.

The sandbox in which we play prefers RSAs, especially those that contain the maximum number of assets and that use pinning as sparingly as possible.

So when we do modern ad optimization, we must consider not only conversions per impression but also the number of impressions each ad can deliver.


Modern ad testing needs to account for differences in impressions.
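A small Python sketch shows why the rate alone can mislead. The two ads and all of their numbers are hypothetical; the point is only that expected conversions are the product of impressions and conv/imp, so an ad with a lower rate can still deliver more conversions.

```python
def expected_conversions(impressions, conv_per_imp):
    """Total conversions an ad is expected to deliver."""
    return impressions * conv_per_imp


# Hypothetical numbers for illustration only.
ads = {
    "heavily pinned RSA": {"impressions": 1_000, "conv_per_imp": 0.006},
    "unpinned RSA": {"impressions": 2_100, "conv_per_imp": 0.004},
}

totals = {name: expected_conversions(**metrics) for name, metrics in ads.items()}

# The winner by rate and the winner by volume can be different ads.
best_by_rate = max(ads, key=lambda name: ads[name]["conv_per_imp"])
best_by_volume = max(totals, key=totals.get)
```

Here the heavily pinned ad wins on conv/imp, but the unpinned ad is expected to bring in more conversions because it is eligible for more impressions.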


A/B Asset Testing With Ad Variations

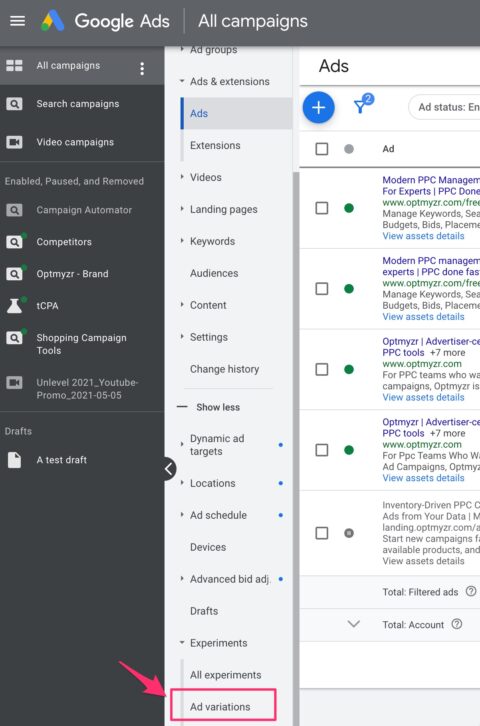

Fortunately, Google has considered the problems RSAs introduced for ad testing and has made updates to Ad Variations, a subset of their Experiment tools, to optimize ads.

Rather than requiring the creation of multiple RSAs, the experiments operate on assets and allow advertisers to test three types of things: pinning assets, swapping assets, and adding assets.

You’ll find all the options in the left-side menu for Experiments.


Look for Ad Variations in the Experiments menu of Google Ads.


Test Pinning

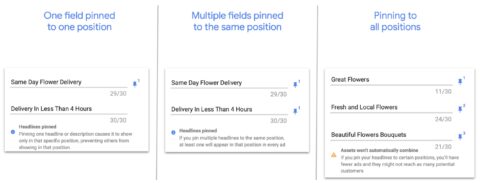

Pinning is a way to tell Google which pieces of text should always be shown in certain parts of the ad.

The simplest form of pinning tells Google to show one specific piece of content in a specific location. A common use is to always show the brand in headline 1.


Image from Google Ads, June 2022


A more advanced implementation is to pin multiple pieces of text to a specific location.

Of course, the ad can show only one of the pinned texts at any time, so this is a way to balance advertiser control with the benefits of dynamically generated ads.

A common use would be to test three variations of a brand message by pinning all three variations to headline position 1.

The most extreme form of pinning is to create what some have called a “fake ETA” by pinning text to every position of the RSA. Google recommends against this because it defeats the purpose of RSAs.

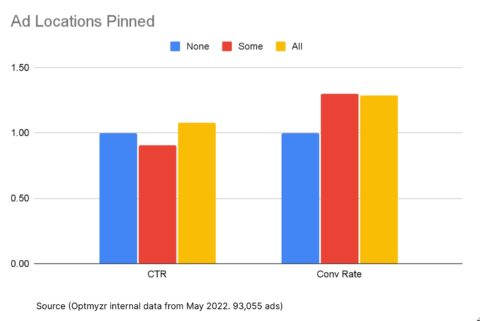

In Optmyzr’s RSA study, we also found that this type of pinning can dramatically reduce the number of impressions the ad group can get.

But somewhat to our surprise, we also found that fake ETAs have higher CTR and higher conversion rates than pure RSAs.

One theory is that advertisers who’ve spent years perfecting their ads using ETA optimization techniques already have such great ads that machine learning may have little to offer in terms of upside.

Image from Optmyzr, June 2022

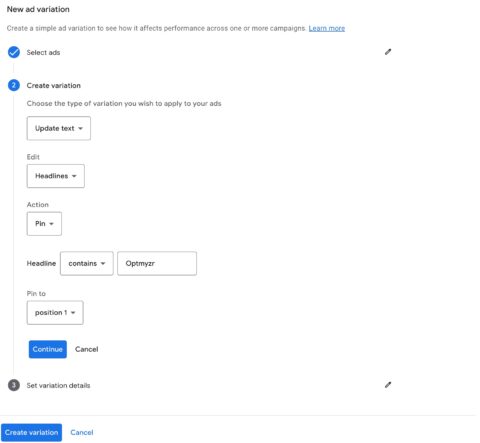

To start an ad test with pinning, look for the Ad Variation option to update text and then choose the action to pin.


Image from Google Ads, June 2022


You can then build rules for which headlines and descriptions to pin to a variety of locations.

For example, you could say that any headline that contains your brand name should be pinned to headline position 1.
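The logic of such a rule can be sketched in a few lines of Python. This is not the Google Ads interface or API, just an illustration of “if a headline contains this text, pin it to this position”; the function name, the brand “Acme,” and the headlines are all hypothetical.

```python
def pin_matching_headlines(headlines, contains, position):
    """Map each headline to a pinned position, or None if it stays unpinned.

    A headline is pinned when it contains the given text (case-insensitive).
    """
    needle = contains.lower()
    return {
        headline: (position if needle in headline.lower() else None)
        for headline in headlines
    }


headlines = [
    "Acme Official Site",
    "Free Shipping Today",
    "Shop ACME Running Shoes",
]

pins = pin_matching_headlines(headlines, contains="acme", position=1)
```

In this sketch, both headlines that mention the brand end up pinned to headline position 1, while “Free Shipping Today” is left for Google to place dynamically.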

One limitation is that you cannot create an ad variation experiment that tests pinning for multiple locations at the same time.

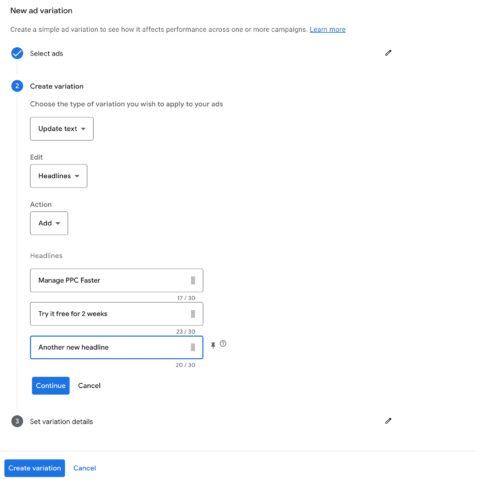

Test Adding Assets

Another experiment available with Ad Variations is to test what would happen if certain assets were added or removed.

This type of test is well suited to testing bigger changes, for example, to see what would happen if you included a special offer, a different unique value proposition, or a different call to action.


Image from Google Ads, June 2022


You can also use this to test the impact of Ad Customizers on your performance.

Some ad customizers available in RSAs are location insertion, countdowns, and business data.

Test Replacing Assets

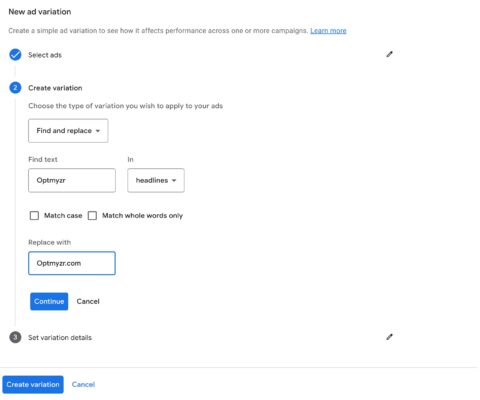

The third and final type of ad test supported in Ad Variations is to test what would happen if an asset were changed.

This type of experiment lends itself to testing more subtle changes.

For example, what would be the impact of saying “10% discount” rather than “save 10% today”?

Both are the same offer but expressed differently.


Image from Google Ads, June 2022


Measurement

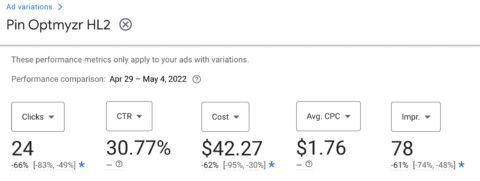

Ad Variation experiments automatically come with proper measurement.

For example, here you see the results of a test we ran with pinning.

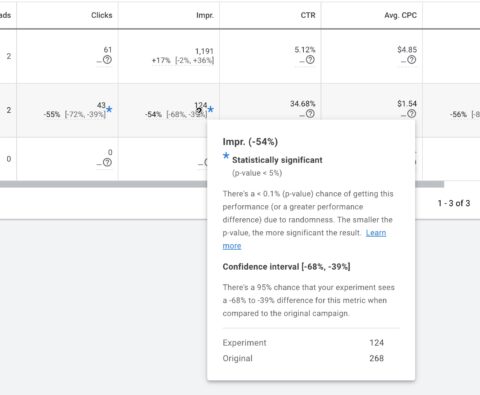

Statistically significant results are marked with an asterisk.

Image from Google Ads, June 2022

When you hover over the stats, more details are revealed that explain the confidence levels of the experiments.

Image from Google Ads, June 2022

From there, it takes a single click to promote winning tests so they become part of your RSAs.

Note that these Ad Variation tests are intended to be run at the campaign level or higher (cross-campaign).

Currently, it is not possible to run an ad test for a single RSA or in a single ad group. Google has said they are aware of this limitation and are working towards a solution.

Conclusion

As ad formats in Google have changed, it’s time to also change how we do ad testing.

Ad Variations, built right into Google Ads, are an easy way to create experiments that work with assets rather than entire ads, and you can even use them to test pinning.

Optmyzr’s most recent RSA study showed that impressions now depend as much on having good ads as on having good keywords. The modern way to optimize PPC ads is to work towards ads that combine strong CTR and conversion rate with lots of high-quality impressions.


Featured Image: Imagentle/Shutterstock