YouTube has announced a new tool in Creator Studio that requires creators to disclose when their videos contain realistic content generated or altered by AI.

As stated in YouTube’s announcement:

“Viewers increasingly want more transparency about whether the content they’re seeing is altered or synthetic.”

To address this growing demand, YouTube will now mandate that creators inform their audience when a video features content that could easily be mistaken for a real person, place, or event but has been created or modified using generative AI or other synthetic media.

Disclosure Labels & Requirements

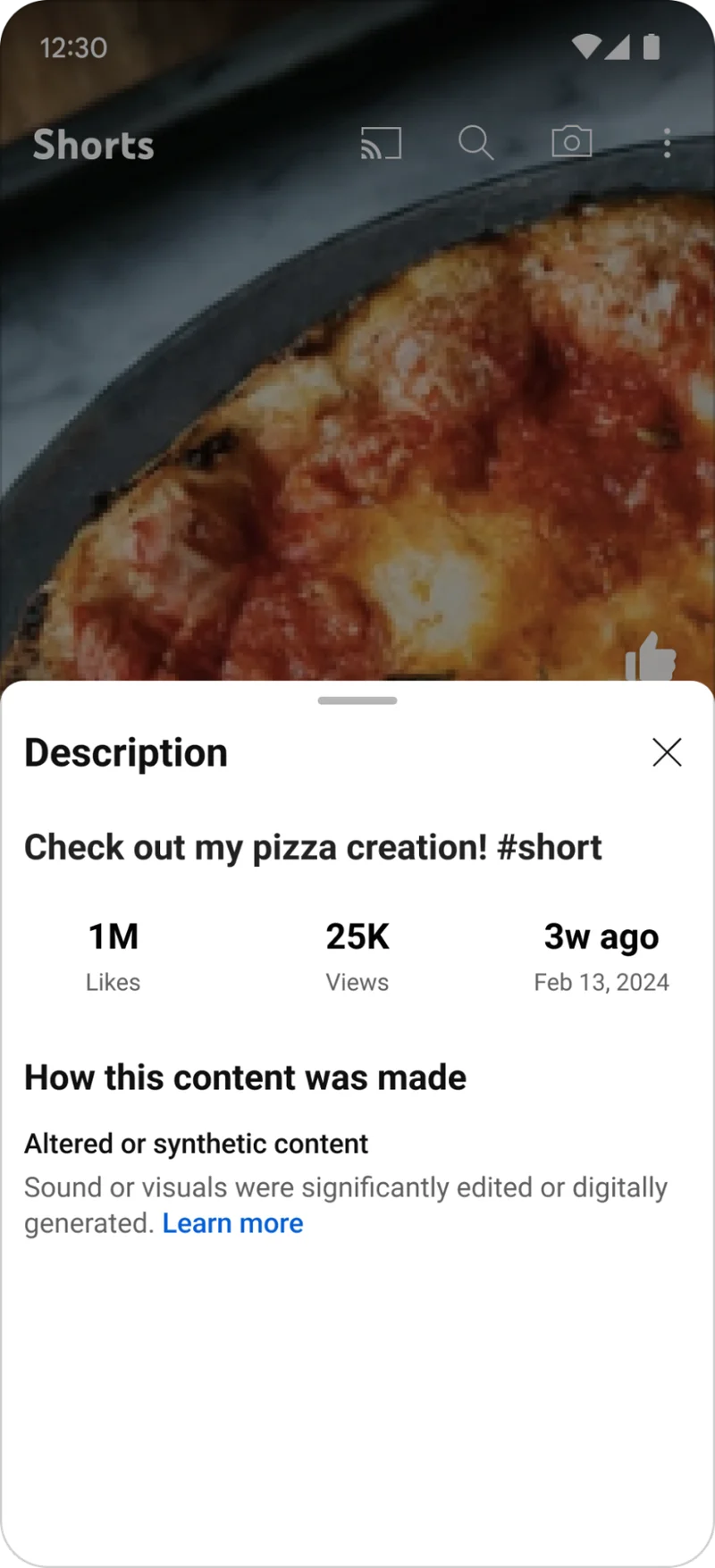

Disclosures will appear as labels in the expanded description or directly on the video player.

Screenshot from: blog.youtube/news-and-events/disclosing-ai-generated-content/, March 2024.


YouTube believes this new label will “strengthen transparency with viewers and build trust between creators and their audience.”

Examples of content that will require disclosure include:

- Digitally altering a video to replace one person’s face with another’s.

- Synthetically generating a person’s voice for narration.

- Altering footage of actual events or places to make them appear different from reality.

- Generating realistic scenes of significant events that never happened.

Exceptions To Disclosure

YouTube acknowledges that creators use generative AI in various ways throughout the creative process and will not require disclosure when AI is used for productivity purposes, such as generating scripts, content ideas, or automatic captions.

Additionally, disclosure will not be necessary for unrealistic or inconsequential changes, such as color adjustments, lighting filters, special effects, or beauty filters.

Rollout & Enforcement

The labels will be rolled out across all YouTube surfaces and formats in the coming weeks, starting with the YouTube app on mobile devices and eventually expanding to desktop and TV.

While creators will be given time to adjust to the new process, YouTube may consider enforcement measures in the future for those who consistently fail to disclose the use of AI-generated content.

In some cases, particularly when the altered or synthetic content has the potential to confuse or mislead people, YouTube may add a label even if the creator has not added one.

YouTube is working on updating its privacy process to allow individuals to request the removal of AI-generated or synthetic content that simulates an identifiable person, including their face or voice.

In Summary

As viewers demand more transparency, marketers must be upfront about using AI-generated content to remain in good standing on YouTube.

While AI can be a powerful tool for content creation, marketers should strive to balance AI’s capabilities with a human touch.

FAQ

How does YouTube’s new policy on AI-generated content disclosure impact content creators?

- The policy requires content creators to disclose if their videos include AI-generated or significantly altered content that mimics real-life scenarios.

- Disclosure labels will appear in the expanded video description or directly on the player.

- Failure to comply with disclosure requirements could result in YouTube taking enforcement measures.

What are the exceptions to YouTube’s mandatory AI content disclosure requirements?

- Unrealistic or animated content, special effects, and AI used for productivity tasks such as generating scripts, content ideas, or automatic captions are exempt from disclosure.

- The exemption also covers minor adjustments like color correction, lighting filters, or beauty filters.

What actions will YouTube take if creators do not disclose AI-generated content?

- In cases where AI-generated content could mislead viewers, YouTube may add a disclosure label if the creator hasn’t.

- Creators will have a grace period to adjust to the new policy, but persistent non-compliance may lead to enforcement measures.

- YouTube is refining its privacy guidelines to allow individuals to request the removal of AI-generated content that uses their likeness without consent.

Featured Image: Muhammad Alimaki/Shutterstock