This post was sponsored by JetOctopus. The opinions expressed in this article are the sponsor’s own.

If you manage a large website with over 10,000 pages, you can likely appreciate the unique SEO challenges that come with such scale.

Sure, the traditional tools and tactics — keyword optimization, link building, etc. — are important to establish a strong foundation and maintain basic SEO hygiene.

However, they may not fully address the technical complexities of keeping a site visible to search bots, or the dynamic needs of a large enterprise website.

This is where log analyzers become crucial. An SEO log analyzer monitors and analyzes server access logs to give you real insights into how search engines interact with your website. It allows you to take strategic action that satisfies both search crawlers and users, leading to stronger returns on your efforts.

In this post, you’ll learn what a log analyzer is and how it can enable your enterprise SEO strategy to achieve sustained success. But first, let’s take a quick look at what makes SEO tricky for big websites with thousands of pages.

The Unique SEO Challenges For Large Websites

Managing SEO for a website with over 10,000 pages isn’t just a step up in scale; it’s a whole different ball game.

Relying on traditional SEO tactics limits your site’s potential for organic growth. You can have the best titles and content on your pages, but if Googlebot can’t crawl them effectively, those pages will be ignored and may never rank.

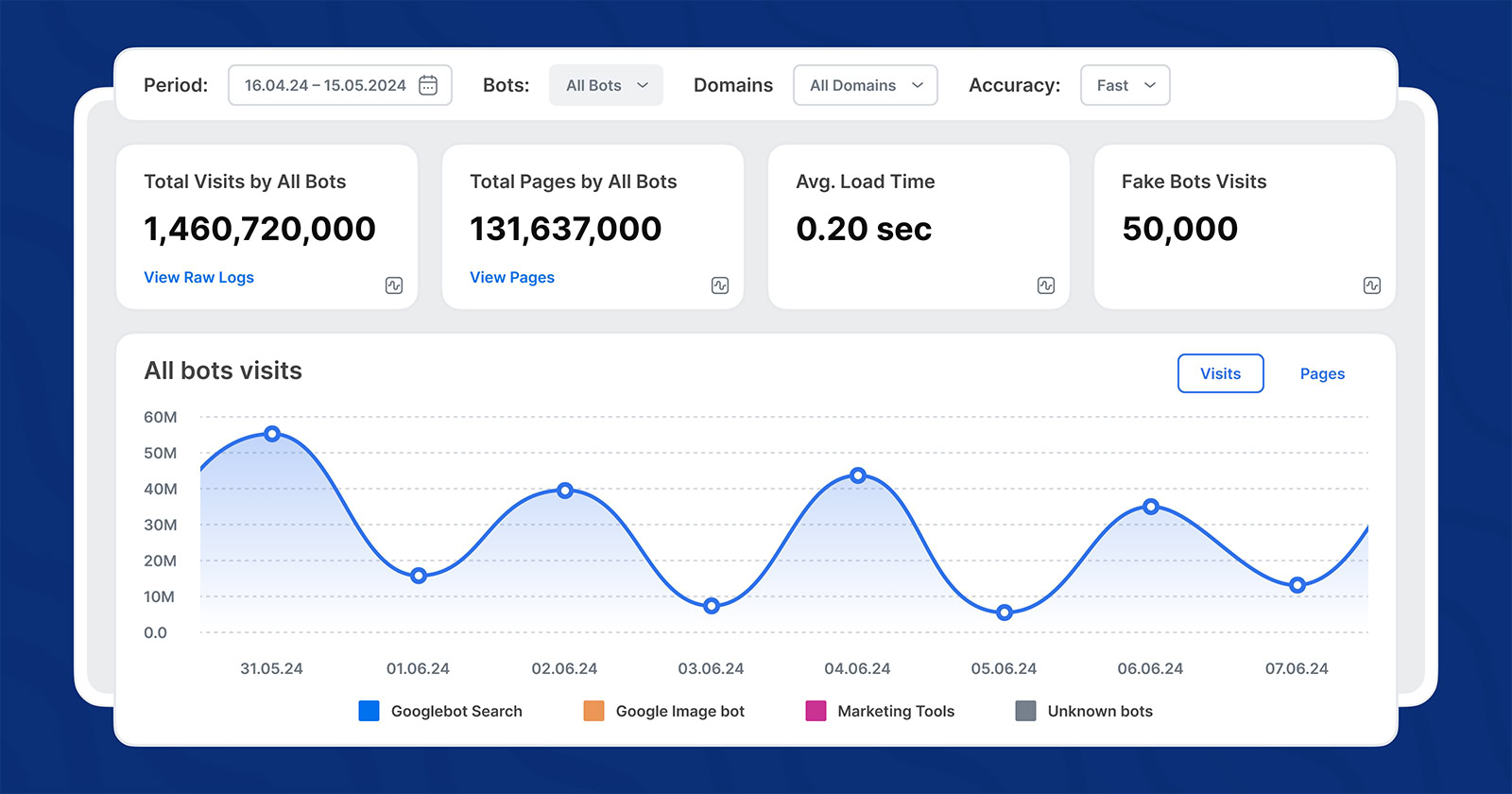

Image created by JetOctopus, May 2024

For big websites, the sheer volume of content and pages makes it difficult to ensure every (important) page is optimized for visibility to Googlebot. Then, the added complexity of an elaborate site architecture often leads to significant crawl budget issues. This means Googlebot is missing crucial pages during its crawls.

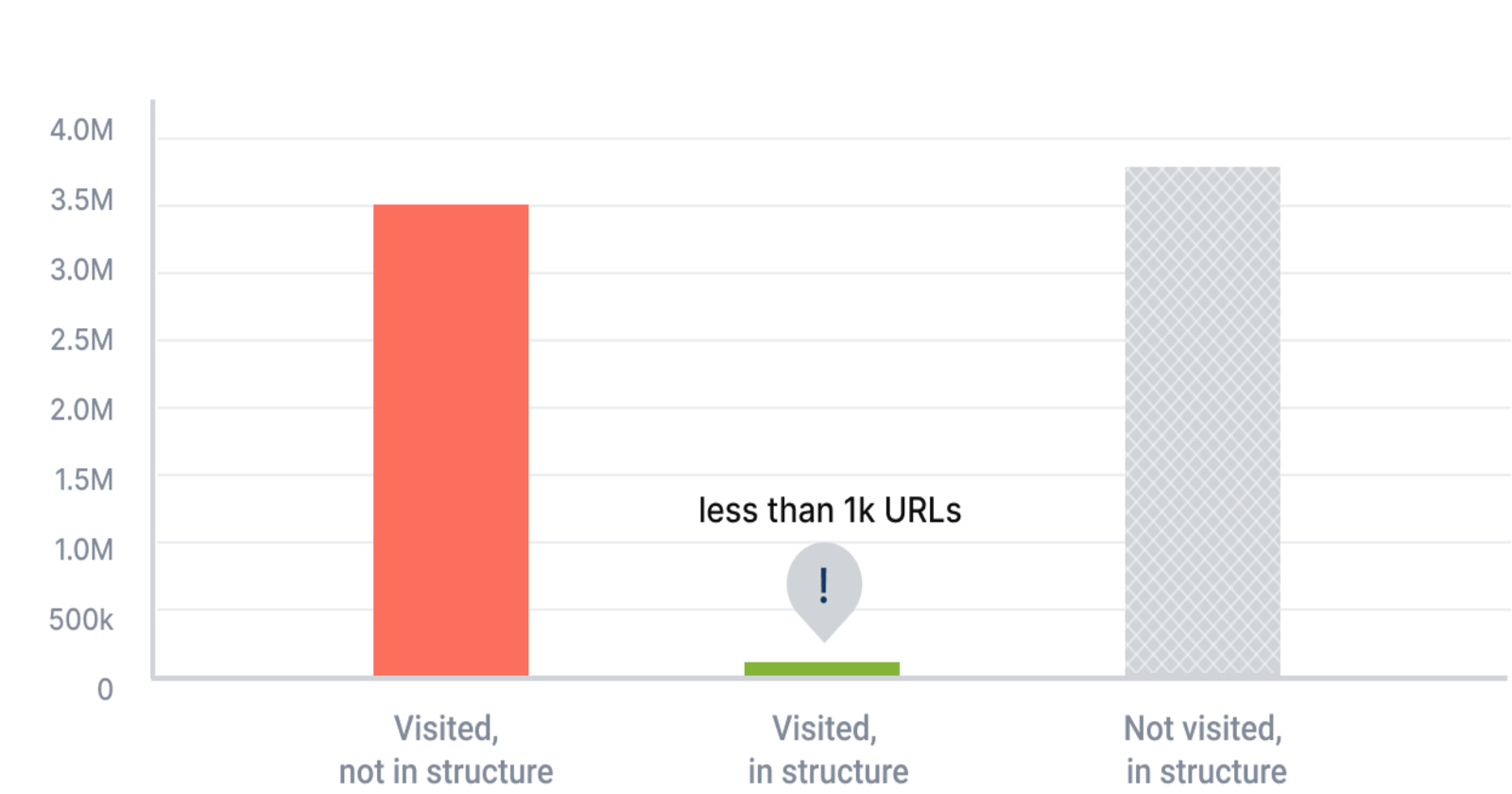

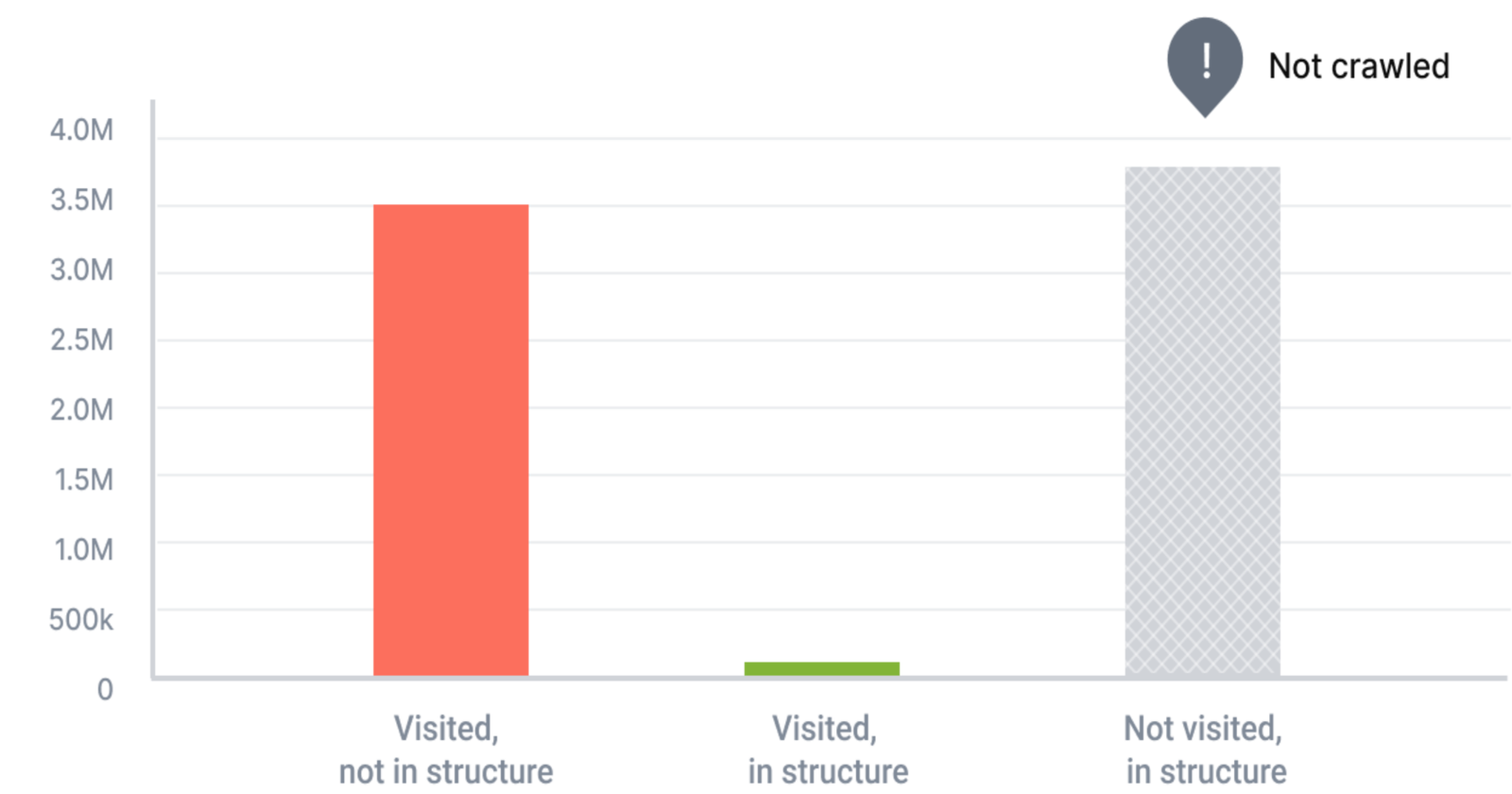

Image created by JetOctopus, May 2024

Furthermore, big websites are more vulnerable to technical glitches, such as unexpected tweaks in the code from the dev team, that can impact SEO. These glitches often exacerbate other issues like slow page speeds due to heavy content, broken links in bulk, or redundant pages that compete for the same keywords (keyword cannibalization).

All in all, these issues that come with size necessitate a more robust approach to SEO. One that can adapt to the dynamic nature of big websites and ensure that every optimization effort is more meaningful toward the ultimate goal of improving visibility and driving traffic.

This strategic shift is where the power of an SEO log analyzer becomes evident: it provides granular insights that help prioritize high-impact actions. The primary action is to understand Googlebot as if it were your website’s main user, because until Googlebot accesses your important pages, they won’t rank or drive traffic.

What Is An SEO Log Analyzer?

An SEO log analyzer is essentially a tool that processes and analyzes the data generated by web servers every time a page is requested. It tracks how search engine crawlers interact with a website, providing crucial insights into what happens behind the scenes. A log analyzer can identify which pages are crawled, how often, and whether any crawl issues occur, such as Googlebot being unable to access important pages.

By analyzing these server logs, log analyzers help SEO teams understand how a website is actually seen by search engines. This enables them to make precise adjustments to enhance site performance, boost crawl efficiency, and ultimately improve SERP visibility.

Put simply, a deep dive into log data helps discover opportunities and pinpoint issues that might otherwise go unnoticed in large websites.
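To make this concrete, here is a minimal Python sketch of the core idea: reading access log lines and counting how often Googlebot requested each URL. It assumes the server writes the common "combined" log format, and the sample lines are hypothetical. Matching on the user-agent string alone is only an approximation, since user agents can be spoofed.

```python
import re
from collections import Counter

# Assumed input: the "combined" access log format used by many Apache/Nginx setups.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Hypothetical sample lines, for illustration only.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/May/2024:06:25:01 +0000] "GET /category/shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:06:25:09 +0000] "GET /product/123 HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    f'203.0.113.7 - - [10/May/2024:06:26:30 +0000] "GET /category/shoes HTTP/1.1" 200 5120 "-" "{BROWSER_UA}"',
    f'66.249.66.1 - - [10/May/2024:07:02:44 +0000] "GET /category/shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
]


def googlebot_hits(lines):
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


for path, count in googlebot_hits(SAMPLE_LOG).most_common():
    print(path, count)
```

A dedicated log analyzer does far more than this, but the sketch shows where the "which pages are crawled, and how often" numbers ultimately come from.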

But why exactly should you focus your efforts on treating Googlebot as your most important visitor?

Why is crawl budget a big deal?

Let’s look into this.

Optimizing Crawl Budget For Maximum SEO Impact

Crawl budget refers to the number of pages a search engine bot — like Googlebot — will crawl on your site within a given timeframe. Once a site’s budget is used up, the bot will stop crawling and move on to other websites.

Crawl budgets vary from site to site. Google determines your site’s budget by considering a range of factors such as the site’s size, performance, frequency of updates, and links. When you optimize these factors strategically, you can increase your crawl budget and speed up ranking for new website pages and content.

As you’d expect, making the most of this budget ensures that your most important pages are frequently visited and indexed by Googlebot. This typically translates into better rankings (provided your content and user experience are solid).

And here’s where a log analyzer tool makes itself particularly useful by providing detailed insights into how crawlers interact with your site. As mentioned earlier, it allows you to see which pages are being crawled and how often, helping identify and resolve inefficiencies such as low-value or irrelevant pages that are wasting valuable crawl resources.
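As a rough illustration of spotting where crawl resources go, the sketch below groups bot hits by top-level site section and reports each section's share. The function name and the hit counts are hypothetical; in practice the counts would come from your own server logs.

```python
from collections import Counter


def crawl_share_by_section(hits_per_path):
    """Return each top-level section's share of bot hits, as a percentage."""
    section_hits = Counter()
    for path, hits in hits_per_path.items():
        # "/category/shoes?page=2" -> "category"; "/" -> "(root)"
        first_segment = path.split("?", 1)[0].strip("/").split("/", 1)[0]
        section_hits[first_segment or "(root)"] += hits
    total = sum(section_hits.values())
    if total == 0:
        return {}
    return {
        section: round(100 * hits / total, 1)
        for section, hits in section_hits.most_common()
    }


# Hypothetical Googlebot hit counts per URL path.
hits = {
    "/category/shoes": 40,
    "/category/bags": 20,
    "/search?q=red+shoes": 30,
    "/product/123": 10,
}
print(crawl_share_by_section(hits))
```

With these made-up numbers, internal search pages would absorb 30% of bot hits, which is the kind of signal that prompts a closer look at whether a low-value section is consuming crawl budget.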

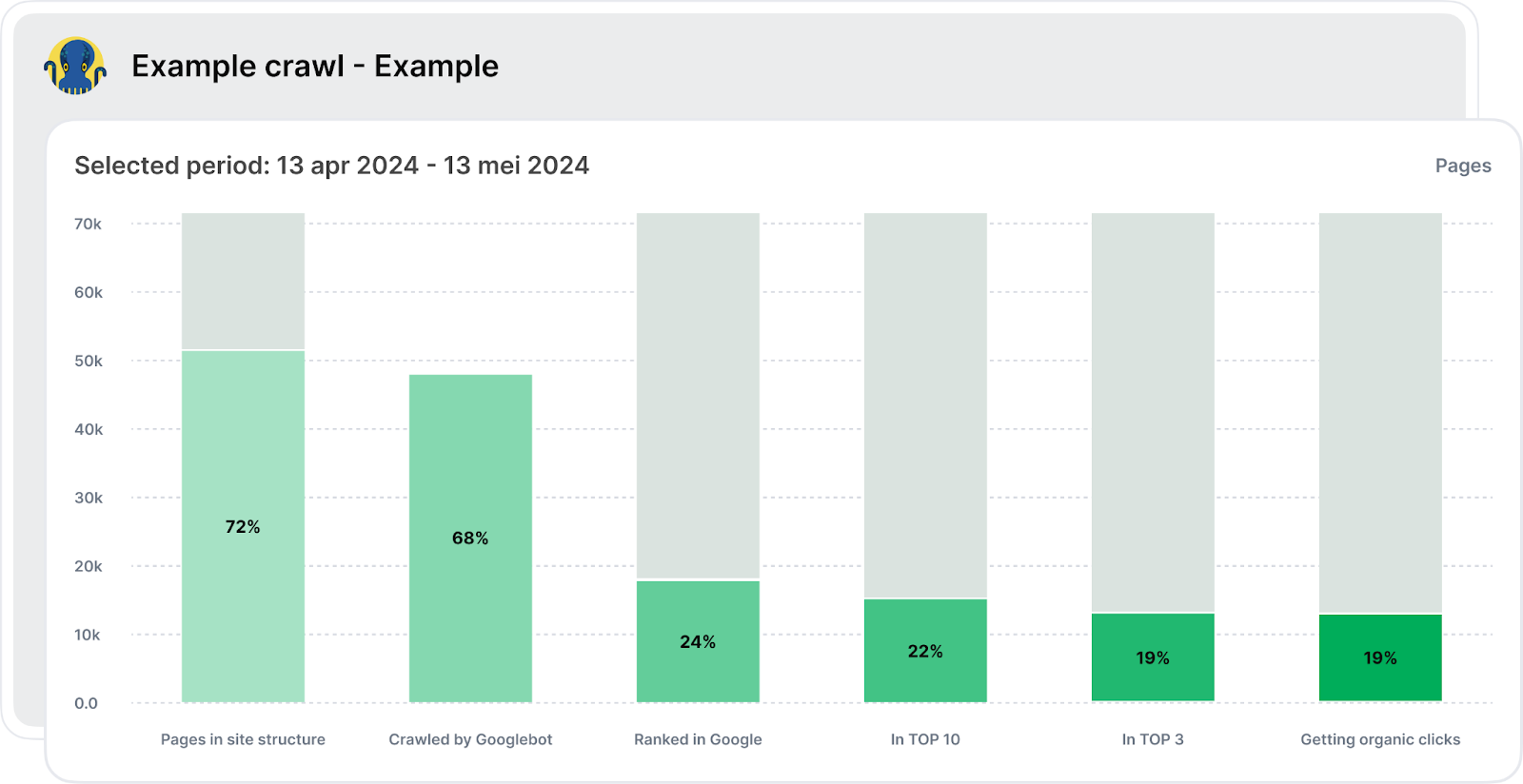

An advanced log analyzer like JetOctopus offers a complete view of all the stages from crawling and indexation to getting organic clicks. Its SEO Funnel covers all the main stages, from your website being visited by Googlebot to being ranked in the top 10 and bringing in organic traffic.

Image created by JetOctopus, May 2024

As you can see above, the tabular view shows how many pages are open to indexation versus those closed from indexation. Understanding this ratio is crucial because if commercially important pages are closed from indexation, they will not appear in subsequent funnel stages.

The next stage examines the number of pages crawled by Googlebot. “Green pages” are those that Googlebot crawls and that sit within the site structure. “Gray pages” are visited by Googlebot but sit outside the structure; they may be orphan pages or pages accidentally excluded from the structure, and they indicate potential crawl budget waste. Hence, it’s vital to analyze this part of your crawl budget for optimization.
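The green/gray split boils down to comparing two sets of URLs: those Googlebot requested (from logs) and those found in the site structure (from a crawl). Here is a minimal Python sketch of that comparison. The function name, the extra "not_crawled" bucket, and the sample URLs are illustrative assumptions, not JetOctopus's implementation.

```python
def classify_crawled_pages(bot_visited, in_structure):
    """Split URLs into green, gray, and not-yet-crawled groups."""
    bot_visited = set(bot_visited)
    in_structure = set(in_structure)
    return {
        # Visited by the bot and reachable within the site structure.
        "green": bot_visited & in_structure,
        # Visited by the bot but absent from the structure (possible orphans).
        "gray": bot_visited - in_structure,
        # In the structure but never requested by the bot (added for illustration).
        "not_crawled": in_structure - bot_visited,
    }


# Hypothetical URL sets.
from_logs = ["/category/shoes", "/product/123", "/old-promo"]
from_crawl = ["/category/shoes", "/product/123", "/product/456"]

groups = classify_crawled_pages(from_logs, from_crawl)
for name, urls in groups.items():
    print(name, sorted(urls))
```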

The later stages include analyzing what percentage of pages are ranked in Google SERPs, how many of these rankings are in the top 10 or top three, and, finally, the number of pages receiving organic clicks.

Overall, the SEO funnel gives you concrete numbers, with links to lists of URLs for further analysis, such as indexable vs. non-indexable pages and how crawl budget waste is occurring. It is an excellent starting point for crawl budget analysis, letting you visualize the big picture and get insights for an impactful optimization plan that drives tangible SEO growth.

Put simply, by prioritizing high-value pages — ensuring they are free from errors and easily accessible to search bots — you can greatly improve your site’s visibility and ranking.

Using an SEO log analyzer, you can understand exactly what should be optimized on pages that are being ignored by crawlers, work on them, and thus attract Googlebot visits. A log analyzer also helps optimize other crucial aspects of your website:

Image created by JetOctopus, May 2024

- Detailed Analysis of Bot Behavior: Log analyzers allow you to dissect how search bots interact with your site by examining factors like the depth of their crawl, the number of internal links on a page, and the word count per page. This detailed analysis provides you with the exact to-do items for optimizing your site’s SEO performance.

- Improves Internal Linking and Technical Performance: Log analyzers provide detailed insights into the structure and health of your site. They help identify underperforming pages and optimize internal link placement, ensuring smoother navigation for both users and crawlers. They also facilitate the fine-tuning of content to better meet SEO standards, while highlighting technical issues that may affect site speed and accessibility.

- Aids in Troubleshooting JavaScript and Indexation Challenges: Big websites, especially eCommerce, often rely heavily on JavaScript for dynamic content. In the case of JS websites, the crawling process is lengthy. A log analyzer can track how well search engine bots are able to render and index JavaScript-dependent content, highlighting potential pitfalls in real time. It also identifies pages that are not being indexed as intended, allowing for timely corrections to ensure all relevant content can rank.

- Helps Optimize Distance from Index (DFI): The concept of Distance from Index (DFI) refers to the number of clicks required to reach any given page from the home page. A lower DFI is generally better for SEO as it means important content is easier to find, both by users and search engine crawlers. Log analyzers help map out the navigational structure of your site, suggesting changes that can reduce DFI and improve the overall accessibility of key content and product pages.
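Since DFI is defined as the number of clicks from the home page, it can be computed with a breadth-first search over the internal link graph. The sketch below assumes you already have a mapping of each page to the pages it links to (for example, from a crawl); the function name and the link graph are hypothetical.

```python
from collections import deque


def distance_from_index(links, home="/"):
    """Breadth-first search: minimum number of clicks from home to each page."""
    dfi = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in dfi:  # first visit is the shortest path in BFS
                dfi[target] = dfi[page] + 1
                queue.append(target)
    return dfi


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/category/shoes", "/about"],
    "/category/shoes": ["/category/shoes/running", "/"],
    "/category/shoes/running": ["/product/123"],
}

for page, clicks in distance_from_index(links).items():
    print(clicks, page)
```

In this made-up graph, the product page sits three clicks from the home page. Adding a link to it from the category page would cut its DFI to two. Pages missing from the result are unreachable from the home page through internal links.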

In addition, the historical log data a log analyzer offers can be invaluable. It helps make your SEO performance not only understandable but also predictable. Analyzing past interactions allows you to spot trends, anticipate future hiccups, and plan more effective SEO strategies.

With JetOctopus, you benefit from no volume limits on logs, enabling comprehensive analysis without the fear of missing out on crucial data. This approach is fundamental in continually refining your strategy and securing your site’s top spot in the fast-evolving landscape of search.

Real-World Wins Using A Log Analyzer

Big websites in various industries have leveraged log analyzers to attain and maintain top spots on Google for profitable keywords, which has significantly contributed to their business growth.

For example, Skroutz, Greece’s biggest marketplace website with over 1 million sessions daily, set up a real-time crawl and log analyzer tool that helped them answer questions such as:

- Does Googlebot crawl pages that have more than two filters activated?

- How extensively does Googlebot crawl a particularly popular category?

- What are the main URL parameters that Googlebot crawls?

- Does Googlebot visit pages with filters like “Size,” which are typically marked as nofollow?

Real-time visualization tables, together with more than ten months of historical log data for monitoring Googlebot crawls, enabled Skroutz to find crawling loopholes and decrease its index size, thus optimizing its crawl budget.

Eventually, they also saw a reduced time for new URLs to be indexed and ranked — instead of taking 2-3 months to index and rank new URLs, the indexing and ranking phase took only a few days.
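Questions like the ones Skroutz asked about filters and URL parameters can be approximated from the list of URLs a bot requested. The following Python sketch counts which query parameters appear in crawled URLs and flags URLs with more than two filter parameters. The parameter names, URLs, and function names are hypothetical and do not describe Skroutz's actual setup.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def query_param_names(url):
    """Return the set of query parameter names used in a URL."""
    query = urlsplit(url).query
    return {name for name, _ in parse_qsl(query, keep_blank_values=True)}


def parameter_usage(urls):
    """Count how many crawled URLs contain each query parameter."""
    counts = Counter()
    for url in urls:
        counts.update(query_param_names(url))
    return counts


def heavily_filtered(urls, filter_params, max_filters=2):
    """Return URLs that use more than `max_filters` of the given filter parameters."""
    return [
        url for url in urls
        if len(query_param_names(url) & set(filter_params)) > max_filters
    ]


# Hypothetical URLs requested by Googlebot.
crawled = [
    "/c/shoes?size=42",
    "/c/shoes?size=42&color=red&brand=acme",
    "/c/shoes?page=2",
    "/c/shoes",
]

print(parameter_usage(crawled).most_common())
print(heavily_filtered(crawled, {"size", "color", "brand"}))
```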

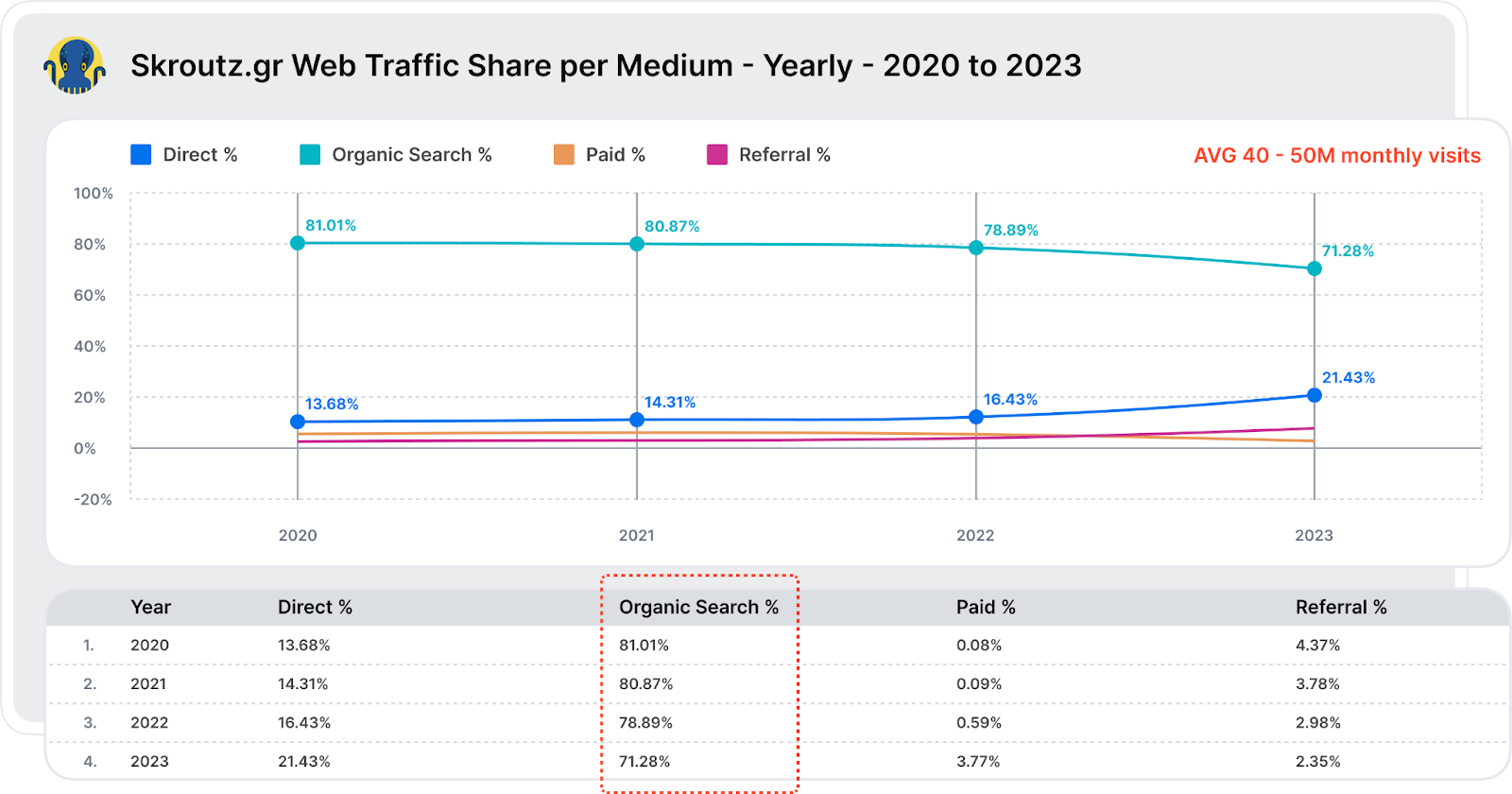

This strategic approach to technical SEO using log files has helped Skroutz cement its position as one of the top 1000 websites globally according to SimilarWeb, and the fourth most visited website in Greece (after Google, Facebook, and YouTube), with over 70% of its traffic coming from organic search.

Image created by JetOctopus, May 2024

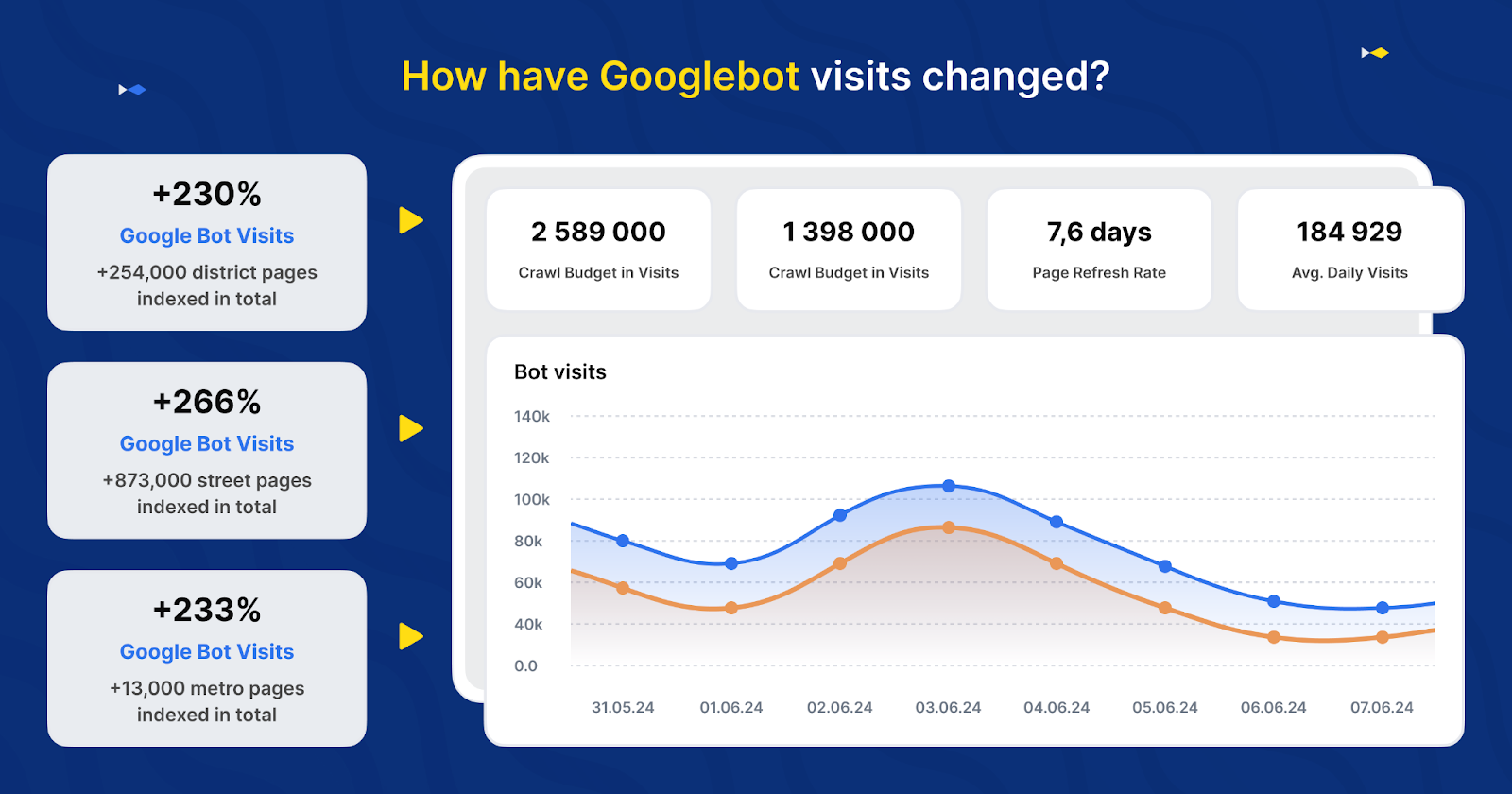

Another case in point is DOM.RIA, Ukraine’s popular real estate and rental listing website, which doubled its Googlebot visits by optimizing its website’s crawl efficiency. As its site structure is huge and elaborate, the team needed to optimize crawl efficiency for Googlebot to ensure the freshness and relevance of content appearing in Google.

Initially, they implemented a new sitemap to improve the indexing of deeper directories. Despite these efforts, Googlebot visits remained low.

By using JetOctopus to analyze their log files, DOM.RIA identified and addressed issues with their internal linking and DFI. They then created mini-sitemaps for poorly scanned directories (such as for the city, including URLs for streets, districts, metro, etc.) while assigning meta tags with links to pages that Googlebot often visits. This strategic change resulted in a more than twofold increase in Googlebot activity on these crucial pages within two weeks.

Image created by JetOctopus, May 2024
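For readers curious what a mini-sitemap for one directory might look like, here is a minimal sketch that filters a URL list by path prefix and writes a small sitemap following the sitemaps.org protocol. The domain, paths, and function name are hypothetical and do not reflect DOM.RIA's actual implementation.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_mini_sitemap(urls, path_prefix):
    """Build sitemap XML containing only URLs whose path starts with path_prefix."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        if urlsplit(url).path.startswith(path_prefix):
            url_node = ET.SubElement(urlset, "url")
            ET.SubElement(url_node, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URL list; only the poorly crawled /city/kyiv/ directory is kept.
all_urls = [
    "https://example.com/city/kyiv/streets",
    "https://example.com/city/kyiv/districts",
    "https://example.com/city/lviv/streets",
]

sitemap_xml = build_mini_sitemap(all_urls, "/city/kyiv/")
print(sitemap_xml)
```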

Getting Started With An SEO Log Analyzer

Now that you know what a log analyzer is and what it can do for big websites, let’s take a quick look at the steps involved in log analysis.

Here is an overview of using an SEO log analyzer like JetOctopus for your website:

- Integrate Your Logs: Begin by integrating your server logs with a log analysis tool. This step is crucial for capturing all data related to site visits, which includes every request made to the server.

- Identify Key Issues: Use the log analyzer to uncover significant issues such as server errors (5xx), slow load times, and other anomalies that could be affecting user experience and site performance. This step involves filtering and sorting through large volumes of data to focus on high-impact problems.

- Fix the Issues: Once problems are identified, prioritize and address these issues to improve site reliability and performance. This might involve fixing broken links, optimizing slow-loading pages, and correcting server errors.

- Combine with Crawl Analysis: Merge log analysis data with crawl data. This integration allows for a deeper dive into crawl budget analysis and optimization. Analyze how search engines crawl your site and adjust your SEO strategy to ensure that your most valuable pages receive adequate attention from search bots.

And that’s how you can ensure that search engines are efficiently indexing your most important content.
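As a rough sketch of the "Identify Key Issues" step above, the snippet below filters parsed log records for server errors (5xx) and slow responses. The record fields (`path`, `status`, `response_ms`), the 1,000 ms threshold, and the sample data are assumptions for illustration; your log format may expose response time differently, or not at all.

```python
from collections import Counter


def find_key_issues(records, slow_ms=1000):
    """Count 5xx responses and slow responses per path."""
    server_errors = Counter()
    slow_pages = Counter()
    for record in records:
        if 500 <= record["status"] <= 599:
            server_errors[record["path"]] += 1
        if record["response_ms"] > slow_ms:  # threshold is an assumption
            slow_pages[record["path"]] += 1
    return {"server_errors": server_errors, "slow_pages": slow_pages}


# Hypothetical parsed log records.
records = [
    {"path": "/product/123", "status": 200, "response_ms": 180},
    {"path": "/product/456", "status": 503, "response_ms": 90},
    {"path": "/category/shoes", "status": 200, "response_ms": 2400},
    {"path": "/product/456", "status": 500, "response_ms": 1500},
]

issues = find_key_issues(records)
print("5xx by path:", issues["server_errors"].most_common())
print("slow by path:", issues["slow_pages"].most_common())
```

Sorting by count puts the most frequently affected URLs first, which mirrors the idea of focusing on high-impact problems before the long tail.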

Conclusion

As you can see, the strategic use of log analyzers is more than just a technical necessity for large-scale websites. Optimizing your site’s crawl efficiency with a log analyzer can immensely impact your SERP visibility.

For CMOs managing large-scale websites, embracing a log analyzer and crawler toolkit like JetOctopus is like getting an extra tech SEO analyst that bridges the gap between SEO data integration and organic traffic growth.

Image Credits

Featured Image: Image by JetOctopus. Used with permission.