[ad_1]

Google announced a breakthrough technology called CALM that speeds up large language models (like GPT-3 and LaMDA) without compromising performance levels.

Larger Training Data Is Better But Comes With a Cost

Large Language Models (LLMs) train on large amounts of data.

Training the language models on larger amounts of data results in the model learning new abilities that aren’t always planned for.

For example, adding more training data to a language model can unexpectedly result in it gaining the ability to translate between different languages, even though it wasn’t trained to do that.

These new abilities are called emergent abilities, abilities that aren’t necessarily planned for.

A different research paper (PDF) about emergent abilities states:

“Although there are dozens of examples of emergent abilities, there are currently few compelling explanations for why such abilities emerge in the way they do.”

They can’t explain why different abilities are learned.

But it’s well known that scaling up the amount of data for training the machine allows it to gain more abilities.

The downside of scaling up the training data is that it takes more computational power to produce an output, which makes the AI slower at the time it is generating a text output (a moment that is called the “inference time”).

So the trade-off with making an AI smarter with more data is that the AI also becomes slower at inference time.

Google’s new research paper (Confident Adaptive Language Modeling PDF) describes the problem like this:

“Recent advances in Transformer-based large language models (LLMs) have led to significant performance improvements across many tasks.

These gains come with a drastic increase in the models’ size, potentially leading to slow and costly use at inference time.”

Confident Adaptive Language Modeling (CALM)

Researchers at Google came upon an interesting solution for speeding up the language models while also maintaining high performance.

The solution, to make an analogy, is somewhat like the difference between answering an easy question and solving a more difficult one.

An easy question, like what color is the sky, can be answered with little thought.

But a hard answer requires one to stop and think a little more to find the answer.

Computationally, large language models don’t make a distinction between a hard part of a text generation task and an easy part.

They generate text for both the easy and difficult parts using their full computing power at inference time.

Google’s solution is called Confident Adaptive Language Modeling (CALM).

What this new framework does is to devote less resources to trivial portions of a text generation task and devote the full power for more difficult parts.

The research paper on CALM states the problem and solution like this:

“Recent advances in Transformer-based large language models (LLMs) have led to significant performance improvements across many tasks.

These gains come with a drastic increase in the models’ size, potentially leading to slow and costly use at inference time.

In practice, however, the series of generations made by LLMs is composed of varying levels of difficulty.

While certain predictions truly benefit from the models’ full capacity, other continuations are more trivial and can be solved with reduced compute.

…While large models do better in general, the same amount of computation may not be required for every input to achieve similar performance (e.g., depending on if the input is easy or hard).”

What is Google CALM and Does it Work?

CALM works by dynamically allocating resources depending on the complexity of the individual part of the task, using an algorithm to predict whether something needs full or partial resources.

The research paper shares that they tested the new system for various natural language processing tasks (“text summarization, machine translation, and question answering”) and discovered that they were able to speed up the inference by about a factor of three (300%).

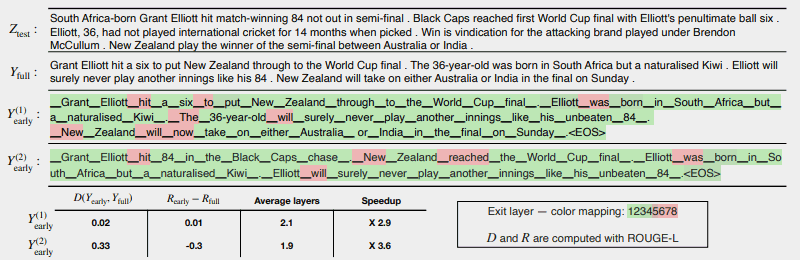

The following illustration shows how well the CALM system works.

The few areas in red indicate where the machine had to use its full capacity on that section of the task.

The areas in green are where the machine only used less than half capacity.

Red = Full Capacity/Green = Less Than Half Capacity

This is what the research paper says about the above illustration:

“CALM accelerates the generation by early exiting when possible, and selectively using the full decoder’s capacity only for few tokens, demonstrated here on a CNN/DM example with softmax-based confidence measure. Y (1) early and Y (2) early use different confidence thresholds for early exiting.

Bellow (sic) the text, we report the measured textual and risk consistency of each of the two outputs, along with efficiency gains.

The colors represent the number of decoding layers used for each token—light green shades indicate less than half of the total layers.

Only a few selected tokens use the full capacity of the model (colored in red), while for most tokens the model exits after one or few decoding layers (colored in green).”

The researchers concluded the paper by noting that implementing CALM requires only minimal modifications in order to adapt a large language model to become faster.

This research is important because it opens the door to creating more complex AI models that are trained on substantially larger data sets without experiencing slower speed while maintaining a high performance level.

Yet it may be possible that this method can also benefit large language models that are trained on less data as well.

For example, InstructGPT models, of which ChatGPT is a sibling model, are trained on approximately 1.3 billion parameters but are still able to outperform models that are trained on substantially more parameters.

The researchers noted in the conclusion:

“Overall, our complete adaptive compute framework for LMs requires minimal modifications to the underlying model and enables efficiency gains while satisfying rigorous quality guarantees for the output.”

This information about this research paper was just published on Google’s AI blog on December 16, 2022. The research paper itself is dated October 25, 2022.

It will be interesting to see if this technology makes it way into large language models of the near future.

Read Google’s blog post:

Accelerating Text Generation with Confident Adaptive Language Modeling (CALM)

Read the Research Paper:

Confident Adaptive Language Modeling (PDF)

Featured image by Shutterstock/Master1305

[ad_2]

Source link