OpenAI CEO Sam Altman responded to a Federal Trade Commission request for information, issued as part of an investigation into whether the company “engaged in unfair or deceptive” practices relating to privacy, data security, and risks of consumer harm, particularly harm to reputation.

it is very disappointing to see the FTC’s request start with a leak and does not help build trust.

that said, it’s super important to us that out technology is safe and pro-consumer, and we are confident we follow the law. of course we will work with the FTC.

— Sam Altman (@sama) July 13, 2023

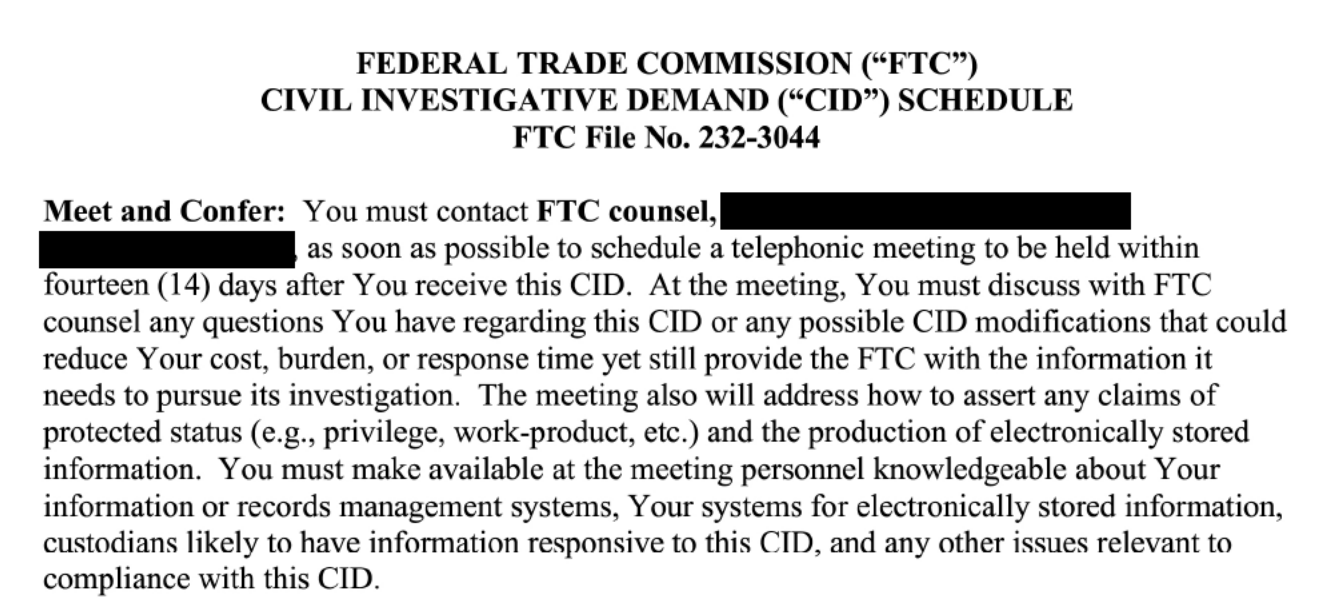

The FTC has requested information from OpenAI dating back to June 2020, as revealed in a leaked document obtained by the Washington Post.

Screenshot from Washington Post, July 2023

The subject of the investigation: did OpenAI violate Section 5 of the FTC Act?

The documentation OpenAI must provide includes details about large language model (LLM) training, refinement, reinforcement learning from human feedback, response reliability, and policies and practices surrounding consumer privacy, security, and risk mitigation.

we’re transparent about the limitations of our technology, especially when we fall short. and our capped-profits structure means we aren’t incentivized to make unlimited returns.

— Sam Altman (@sama) July 13, 2023

The FTC’s Growing Concern Over Generative AI

The investigation into a major AI company’s practices comes as no surprise. The FTC’s interest in generative AI risks has been growing since ChatGPT skyrocketed into popularity.

Attention To Automated Decision-Making Technology

In April 2021, the FTC published guidance on artificial intelligence (AI) and algorithms, warning companies to ensure their AI systems comply with consumer protection laws.

It identified Section 5 of the FTC Act, the Fair Credit Reporting Act, and the Equal Credit Opportunity Act as laws that apply to AI developers and users.

The FTC cautioned that algorithms built on biased data or flawed logic could lead to discriminatory outcomes, even if unintended.

The FTC outlined best practices for ethical AI development based on its experience enforcing laws against unfair practices, deception, and discrimination.

Its recommendations included testing systems for bias, enabling independent audits, avoiding overstated marketing claims, and weighing potential harms against benefits.

“If your algorithm results in credit discrimination against a protected class, you could find yourself facing a complaint alleging violations of the FTC Act and ECOA,” the guidance warns.

AI In Check

The FTC reminded AI companies of its 2021 AI guidance on exaggerated or unsubstantiated marketing claims about AI capabilities.

In the post from February 2023, the agency warned marketers against getting swept up in AI hype and promising what their products cannot deliver.

Common issues cited: claiming that AI can do more than current technology allows, making unsupported comparisons to non-AI products, and failing to test for risks and biases.

The FTC stressed that false or deceptive marketing constitutes illegal conduct regardless of the complexity of the technology.

The reminder came a few weeks after OpenAI’s ChatGPT reached 100 million users.

The FTC also posted a job listing for technologists around this time, including those with AI expertise, to support investigations, policy initiatives, and research on consumer protection and competition in the tech industry.

Deepfakes And Deception

About a month later, in March, the FTC warned that generative AI tools like chatbots and deepfakes could facilitate widespread fraud if deployed irresponsibly.

It cautioned developers and companies using synthetic media and generative AI to consider the inherent risks of misuse.

The agency said bad actors can use the realistic but fake content these AI systems produce for phishing scams, identity theft, extortion, and other harm.

While some uses may be beneficial, the FTC urged firms to consider whether they should make or sell such AI tools at all, given the foreseeable risk of criminal exploitation.

The FTC advised that companies continuing to develop or use generative AI should take robust precautions to prevent abuse.

It also warned against using synthetic media in misleading marketing and against failing to disclose when consumers are interacting with an AI chatbot rather than a real person.

Fake AI Ads Spread Malicious Software

In April, the FTC revealed how cybercriminals exploited interest in AI to spread malware through fake ads.

The bogus ads promoted AI tools and other software on social media and search engines.

Clicking these ads led users to cloned sites that downloaded malware or exploited backdoors to infect devices undetected. The stolen information was then sold on the dark web or used to access victims’ online accounts.

To avoid being hacked, the FTC advised users not to click on software ads.

If infected, users should update security tools and operating systems, then follow steps to remove malware or recover compromised accounts.

The FTC cautioned the public that cybercriminals are growing more sophisticated at spreading malware through advertising networks.

Federal Agencies Unite To Tackle AI Regulation

Near the end of April, four federal agencies – the Consumer Financial Protection Bureau (CFPB), the Department of Justice’s Civil Rights Division (DOJ), the Equal Employment Opportunity Commission (EEOC), and the FTC – released a statement on how they would monitor AI development and enforce laws against discrimination and bias in automated systems.

The agencies asserted authority over AI under existing laws on civil rights, fair lending, equal opportunity, and consumer protection.

Together, they warned that AI systems could perpetuate unlawful bias through flawed data, opaque models, and improper design choices.

The partnership aimed to promote responsible AI innovation that increases consumer access, quality, and efficiency without violating longstanding protections.

AI And Consumer Trust

In May, the FTC warned companies against using new generative AI tools like chatbots to manipulate consumer decisions unfairly.

After describing events from the movie Ex Machina, the FTC argued that the human-like persuasiveness of AI chatbots could steer people into harmful choices about finances, health, education, housing, and jobs.

The FTC said that design elements that exploit human trust in machines to trick consumers can constitute unfair or deceptive practices under the FTC Act, even when the manipulation is not intentional.

The agency advised firms to avoid over-anthropomorphizing chatbots and to clearly disclose paid promotions woven into AI interactions.

With generative AI adoption surging, the FTC alert puts companies on notice to proactively assess downstream societal impacts.

Companies that rush tools to market without proper ethics review or safeguards risk FTC action over any resulting consumer harm.

An Opinion On The Risks Of AI

FTC Chair Lina Khan argued that generative AI, if left unchecked, risks entrenching tech dominance, turbocharging fraud, and automating discrimination.

In a New York Times op-ed published a few days after the consumer trust warning, Khan said the FTC aims to promote competition and protect consumers as AI expands.

Khan warned that a few powerful companies control key AI inputs such as data and computing power, which could further entrench their dominance absent antitrust vigilance.

She cautioned that realistic fake content from generative AI could facilitate widespread scams, and that algorithms trained on biased data risk unlawfully locking people out of opportunities.

Khan asserted that, however novel, AI systems are not exempt from the FTC’s consumer protection and antitrust authority. With responsible oversight, she said, generative AI could grow equitably and competitively, avoiding the pitfalls of earlier tech giants.

AI And Data Privacy

In June, the FTC warned companies that consumer privacy protections apply equally to AI systems reliant on personal data.

In complaints against Amazon and Ring, the FTC alleged unfair and deceptive practices involving the use of voice and video data to train algorithms.

FTC Chair Khan said AI’s benefits don’t outweigh the privacy costs of invasive data collection.

The agency asserted that consumers retain control over their information even when a company holds it, and said it expects strict safeguards and access controls when employees review sensitive biometric data.

For kids’ data, the FTC said it would fully enforce the children’s privacy law, COPPA. The proposed orders required the deletion of ill-gotten biometric data and any AI models derived from it.

The message for tech firms was clear – while AI’s potential is vast, legal obligations around consumer privacy remain paramount.

Generative AI Competition

Near the end of June, the FTC issued guidance cautioning that the rapid growth of generative AI could raise competition concerns if key inputs come under the control of a few dominant technology firms.

The agency said that developing cutting-edge generative AI models requires essential inputs such as data, talent, and computing resources, and warned that if a handful of big tech companies gain too much control over these inputs, they could use that power to distort competition in generative AI markets.

The FTC cautioned that anti-competitive tactics like bundling, tying, exclusive deals, or buying up competitors could allow incumbents to box out emerging rivals and consolidate their lead.

The FTC said it will monitor competition issues surrounding generative AI and take action against unfair practices.

The stated aim was to enable entrepreneurs to innovate with transformative AI technologies, such as chatbots, that could reshape consumer experiences across industries. With the right policies, the FTC said, emerging generative AI could realize its full economic potential.

Suspicious Marketing Claims

In early July, the FTC noted that as AI tools that can generate deepfakes, cloned voices, and artificial text have proliferated, so too have tools claiming to detect such AI-generated content.

However, experts warned that the marketing claims made by some detection tools may overstate their capabilities.

The FTC cautioned companies against exaggerating their detection tools’ accuracy and reliability. Given the limitations of current technology, businesses should ensure marketing reflects realistic assessments of what these tools can and cannot do.

Furthermore, the FTC noted that users should be wary of claims that a tool can catch all AI fakes without errors. Imperfect detection could lead to innocent people, such as job applicants, being unfairly accused of creating fake content.

What Will The FTC Discover?

The FTC’s investigation into OpenAI comes amid growing regulatory scrutiny of generative AI systems.

As these powerful technologies enable new capabilities like chatbots and deepfakes, they raise novel risks around bias, privacy, security, competition, and deception.

OpenAI must answer questions about whether it took adequate precautions in developing and releasing models like GPT-3 and DALL-E that have shaped the trajectory of the AI field.

The FTC appears focused on ensuring OpenAI’s practices align with consumer protection laws, especially regarding marketing claims, data practices, and mitigating societal harms.

How OpenAI responds and whether any enforcement actions arise could set significant precedents for regulation as AI advances.

For now, the FTC’s investigation underscores that the hype surrounding AI should not outpace responsible oversight.

Powerful AI systems hold great promise but pose risks if deployed without sufficient safeguards.

Major AI companies must ensure new technologies comply with longstanding laws protecting consumers and markets.

Featured image: Ascannio/Shutterstock