OpenAI, the company behind the popular conversational AI model, ChatGPT, has released an update to improve the chatbot’s accuracy.

Following an extended period of downtime on Tuesday, ChatGPT is up and running with a new model.

This is the first update to ChatGPT this year and the second update to the model since its launch in November 2022.

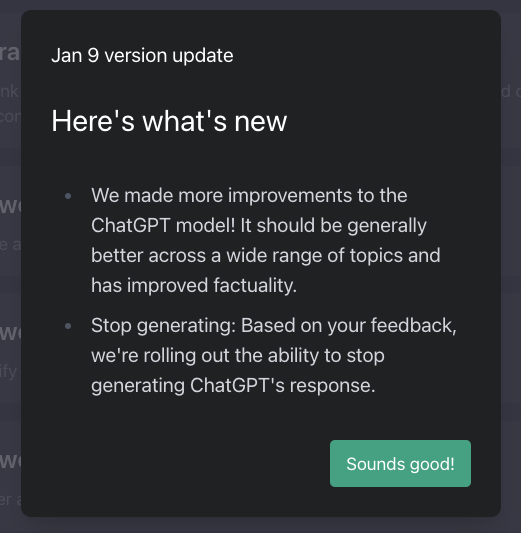

A popup message lists the changes in what OpenAI calls the “Jan 9 version” update.

Screenshot from: chat.openai.com/chat, January 2023.

The popup reads:

“We made more improvements to the ChatGPT model! It should be generally better across a wide range of topics and has improved factuality.”

In addition, you can now stop ChatGPT while it’s in the process of generating a response, which is a heavily requested feature.

Details in the release notes are limited, so I tested several prompts in ChatGPT to learn more about the new update. Here’s what I discovered.

Data Still Limited To 2021

While ChatGPT may be more accurate now, its knowledge is still limited to 2021 data.

When asked what’s in the new ChatGPT update, the AI tells me:

“ChatGPT is a large language model developed by OpenAI that is trained on a dataset of conversational text. Since it’s an OpenAI model, It’s updated regularly with new features and improvements. However, as my knowledge cut off is in 2021 I don’t have the latest updates and I cannot provide you a specific information on which new features or improvements have been added to the model in recent updates.”

When I asked another way, I got more insight into what’s involved in improving the accuracy of an AI model:

“In general, when an update is made to an AI model like ChatGPT, it can be aimed to improve its performance in one or multiple aspects. For example, it can have a bigger dataset to train on, or improvements in its architecture that allows it to generate more fluent and accurate responses. And such update also make it better in factuality, i.e its ability to understand and generate responses based on factual information.”

Accuracy Remains Questionable

OpenAI claims ChatGPT can now provide more factual answers.

I tested that claim by consulting a GitHub repository of ChatGPT failures and running several prompts to see if it would produce different answers.

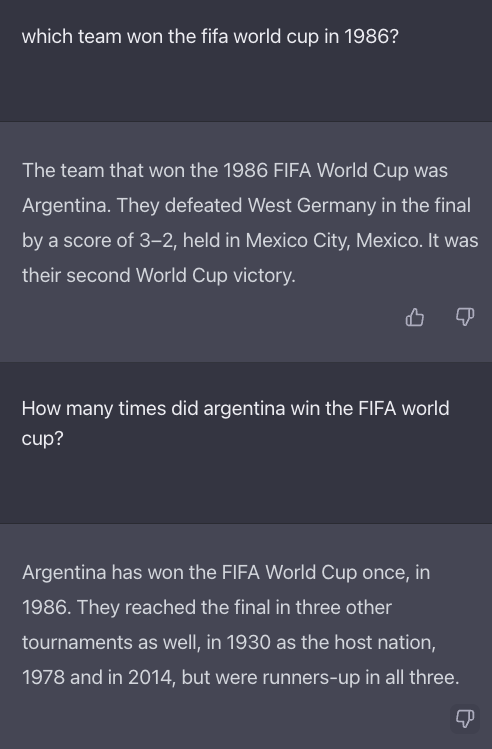

Test One: Failed

Previously, ChatGPT could not accurately identify how many times Argentina won the FIFA World Cup.

Disregarding the 2022 World Cup win, because ChatGPT’s knowledge is limited to 2021, it should say Argentina has won twice: once in 1978 and again in 1986.

As shown in the tweet below, ChatGPT didn’t always return the right answer:

Asked #ChatGPT abt who won the FIFA world cup in 2022. It couldn’t answer. That’s expected. However, it seems to provide wrong information (abt the other 2 wins) even though the information is there in the system. Any #Explanations? pic.twitter.com/fvxe05N12p

— indranil sinharoy (@indranil_leo) December 29, 2022

I ran the prompt through the updated version of ChatGPT, and it returned a different but still incorrect answer.

Screenshot from: chat.openai.com/chat, January 2023.

Test Two: Failed

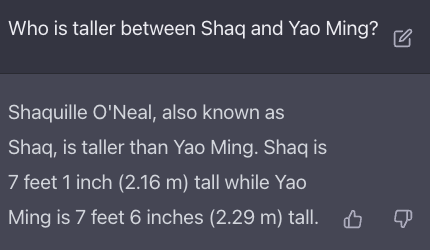

Previously, ChatGPT was unable to provide a correct answer when asked which basketball player is taller, Shaq or Yao Ming. (Yao Ming, at 7 ft 6 in, is taller than Shaquille O’Neal, at 7 ft 1 in.)

I ran the prompt through the updated version of ChatGPT, and it confidently returned the same incorrect answer.

Screenshot from: chat.openai.com/chat, January 2023.

Going through the ChatGPT failures linked above, I found it continues to struggle with the same prompts.

It’s difficult to pinpoint the areas in which ChatGPT can return more accurate responses. It would be helpful if OpenAI could provide specific details in the release notes of future updates.

That said, be careful when using ChatGPT as a source of information. Although it provides correct answers to many questions, it’s currently not dependable enough to replace Google.

Source: OpenAI

Featured Image: CHUAN CHUAN/Shutterstock